AuraFusion360

AuraFusion360: Augmented Unseen Region Alignment for Reference-based 360° Unbounded Scene Inpainting

The field of 3D scene reconstruction has advanced rapidly with techniques such as Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS), which have changed how complex environments are captured and rendered. While these methods deliver high-quality visual detail, keeping edits consistent across views remains a significant challenge, particularly when removing objects and filling the resulting holes in unbounded 360° scenes. AuraFusion360 [1] tackles this problem with a reference-based inpainting approach built on 3D Gaussian Splatting, in which a reference image guides how the missing regions are filled so that the result stays consistent from every viewpoint.

Introducing AuraFusion360

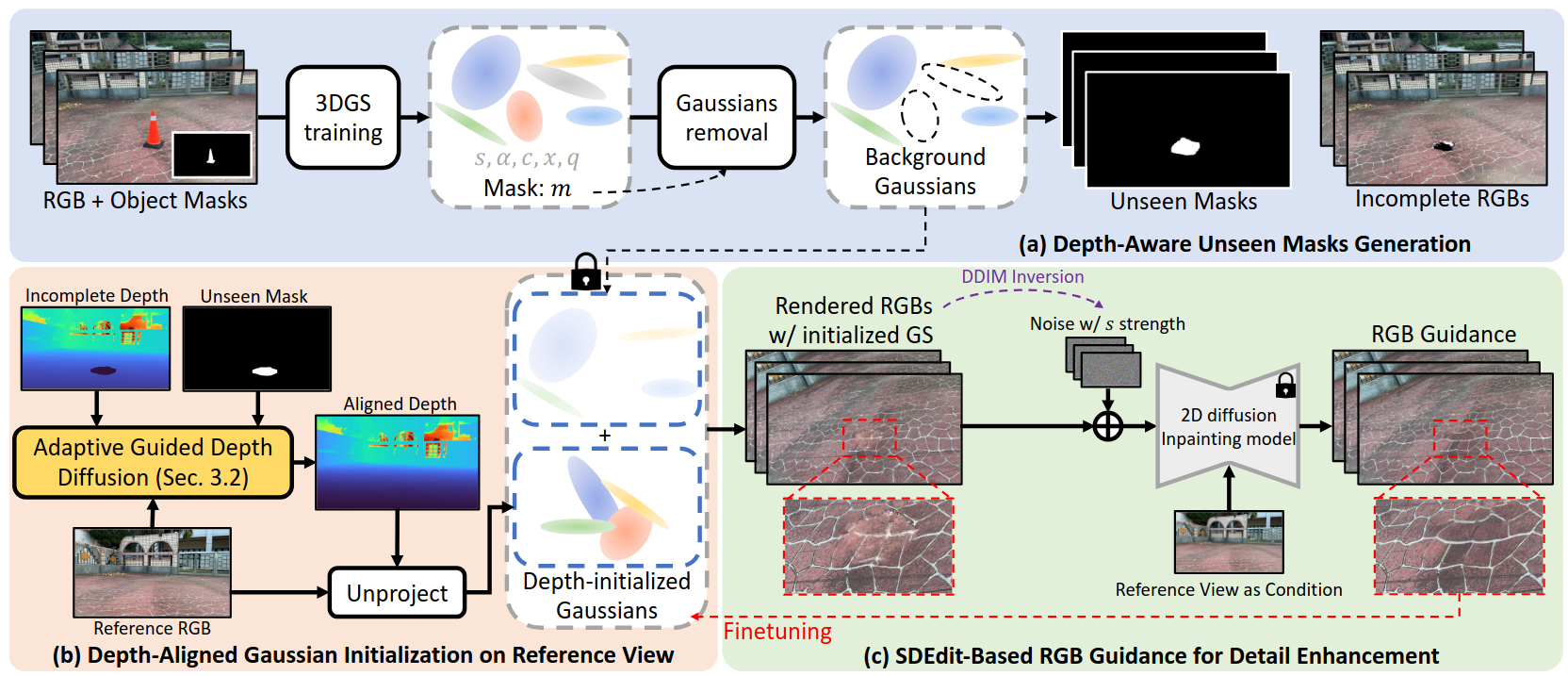

AuraFusion360 combines depth-aware unseen mask generation with Adaptive Guided Depth Diffusion. This combination lets it fill missing regions with detailed content while keeping the geometry consistent across viewpoints.
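To make the idea of a depth-aware unseen mask concrete, the sketch below warps a target view's pixels into other views using depth and checks whether any of those views actually observes the background behind the removed object. It is a simplified illustration under our own assumptions, not the authors' implementation: it assumes pinhole intrinsics `K` shared by all views, 4x4 camera-to-world matrices, a background depth map per view, and boolean object masks. The dictionary layout and the names `unproject`, `project`, `unseen_mask`, and `rel_tol` are hypothetical.

```python
import numpy as np


def unproject(depth, K, c2w):
    """Lift every pixel of an (H, W) depth map to world-space points, shape (H, W, 3)."""
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]  # row (v) and column (u) pixel indices
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).astype(np.float64)
    rays = pix @ np.linalg.inv(K).T  # camera-space rays with z = 1
    pts_cam = rays * depth[..., None]  # scale each ray by its depth
    return pts_cam @ c2w[:3, :3].T + c2w[:3, 3]


def project(points, K, w2c):
    """Project world points (..., 3) into a view: pixel coords (..., 2) and depth (...)."""
    pts_cam = points @ w2c[:3, :3].T + w2c[:3, 3]
    z = pts_cam[..., 2]
    uv = (pts_cam @ K.T)[..., :2] / np.maximum(z, 1e-8)[..., None]
    return uv, z


def unseen_mask(target, others, K, rel_tol=0.05):
    """Masked pixels of `target` that no view in `others` observes.

    Each view is a dict (hypothetical layout) with 'depth' (H, W background depth),
    'mask' (H, W bool object mask) and 'c2w' (4x4 camera-to-world matrix).
    """
    pts = unproject(target["depth"], K, target["c2w"])
    seen = np.zeros(target["mask"].shape, dtype=bool)
    for view in others:
        h, w = view["depth"].shape
        uv, z = project(pts, K, np.linalg.inv(view["c2w"]))
        u = np.round(uv[..., 0]).astype(int)
        v = np.round(uv[..., 1]).astype(int)
        in_frame = (z > 1e-6) & (u >= 0) & (u < w) & (v >= 0) & (v < h)
        uc, vc = np.clip(u, 0, w - 1), np.clip(v, 0, h - 1)
        # Observed only if the object did not cover that pixel and the depths agree.
        not_object = ~view["mask"][vc, uc]
        depth_ok = np.abs(view["depth"][vc, uc] - z) < rel_tol * np.abs(z)
        seen |= in_frame & not_object & depth_ok
    return target["mask"] & ~seen
```

With a single other view that shares the same pose and the same object mask, every masked pixel comes back as unseen; translating that camera sideways reveals the part of the background that the object no longer hides from it.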

How It Works

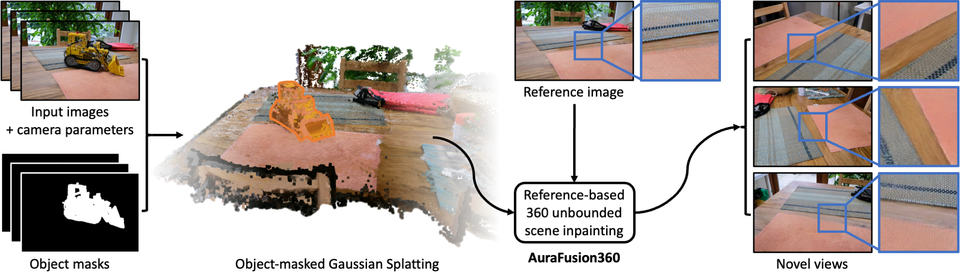

AuraFusion360 takes a set of multi-view images, together with their camera parameters and object masks, and reconstructs the scene as a 3D Gaussian Splatting representation. The pipeline then proceeds in four stages. First, object removal deletes the Gaussians that belong to the masked object. Second, a depth-aware unseen mask is generated by warping depth between views, which identifies the regions that remain occluded in every input view once the object is gone. Third, a reference image guides the initialization of new Gaussians in these unseen regions through Adaptive Guided Depth Diffusion, so that the added geometry is aligned with the rest of the scene. Finally, SDEdit-based refinement enhances the inpainted details, producing a 360° scene that is consistent across views in both geometry and appearance.
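The removal and initialization steps described above can be sketched in NumPy under simplifying assumptions. `remove_masked_gaussians` drops Gaussians whose centers project into the object mask in at least half of the views whose image they land in; the voting rule and the `min_ratio` threshold are our assumptions. `init_unseen_points` then lifts the unseen pixels of the reference view to colored 3D points that could seed new Gaussians. For depth, it uses a plain least-squares scale-and-shift alignment of a monocular depth estimate to the rendered depth of still-observed pixels. This is a much simpler stand-in for Adaptive Guided Depth Diffusion, which is not reproduced here. All function names, inputs, and parameters are hypothetical.

```python
import numpy as np


def project(points, K, w2c):
    """Project world points (N, 3) to pixel coords (N, 2) and camera-space depth (N,)."""
    cam = points @ w2c[:3, :3].T + w2c[:3, 3]
    z = cam[:, 2]
    uv = (cam @ K.T)[:, :2] / np.maximum(z, 1e-8)[:, None]
    return uv, z


def remove_masked_gaussians(means, K, w2cs, masks, min_ratio=0.5):
    """Return a boolean keep-mask over Gaussian centers `means` (N, 3).

    A Gaussian is dropped if its center lands inside the object mask in at least
    `min_ratio` of the views whose image it projects into.
    """
    hits = np.zeros(len(means))
    in_frame = np.zeros(len(means))
    for w2c, mask in zip(w2cs, masks):
        h, w = mask.shape
        uv, z = project(means, K, w2c)
        u = np.round(uv[:, 0]).astype(int)
        v = np.round(uv[:, 1]).astype(int)
        ok = (z > 1e-6) & (u >= 0) & (u < w) & (v >= 0) & (v < h)
        in_frame += ok
        hits[ok] += mask[v[ok], u[ok]]
    ratio = np.divide(hits, in_frame, out=np.zeros_like(hits), where=in_frame > 0)
    return ratio < min_ratio


def align_scale_shift(pred, target, valid):
    """Fit s, b minimizing ||s * pred + b - target||^2 over `valid` pixels; return s * pred + b."""
    A = np.stack([pred[valid], np.ones(int(valid.sum()))], axis=1)
    (s, b), *_ = np.linalg.lstsq(A, target[valid], rcond=None)
    return s * pred + b


def init_unseen_points(ref_rgb, mono_depth, rendered_depth, unseen, K, c2w):
    """Lift the reference view's unseen pixels to world-space points with their colors."""
    # Fit the monocular depth to the rendered depth where the scene is still observed.
    valid = ~unseen & (rendered_depth > 0)
    depth = align_scale_shift(mono_depth, rendered_depth, valid)
    v, u = np.nonzero(unseen)
    pix = np.stack([u, v, np.ones_like(u)], axis=1).astype(np.float64)
    cam = (pix @ np.linalg.inv(K).T) * depth[v, u][:, None]
    world = cam @ c2w[:3, :3].T + c2w[:3, 3]
    return world, ref_rgb[v, u]
```

The scale-and-shift fit only corrects a global affine error in the estimated depth. Aligning the new geometry more carefully with its surroundings is the kind of problem a guided depth model is meant to address.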

Results

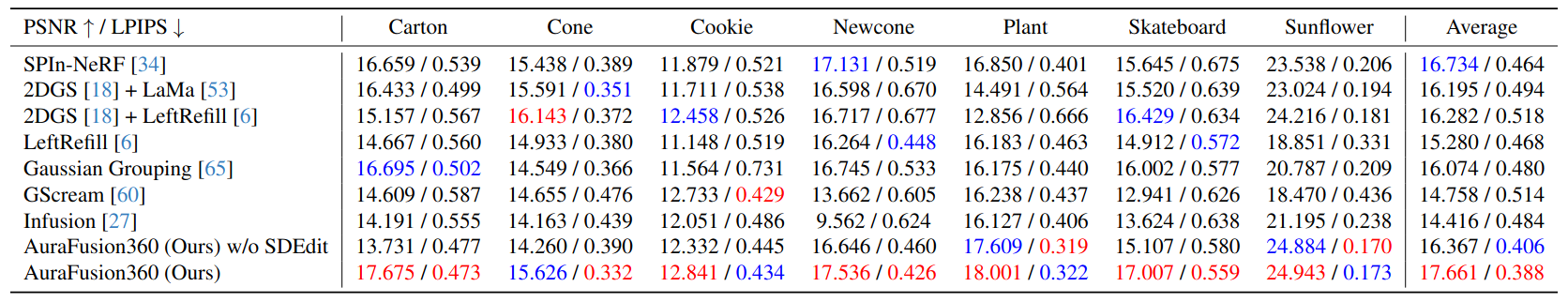

Quantitatively, AuraFusion360 achieves high PSNR and low LPIPS scores, reflecting strong inpainting quality and consistency across viewpoints.
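For reference, PSNR compares a rendered view with its ground-truth image through the mean squared error, PSNR = 10 * log10(MAX^2 / MSE), so higher values mean a closer match. The minimal NumPy sketch below assumes images scaled to [0, 1] (hence `max_val=1.0`). It is a generic definition, not the paper's evaluation code. LPIPS is not reproduced because it compares features from a pretrained network; lower LPIPS values indicate greater perceptual similarity.

```python
import numpy as np


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in decibels between two images of equal shape."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


# Usage: a uniform error of 0.1 gives MSE = 0.01, i.e. about 20 dB.
gt = np.zeros((4, 4, 3))
print(round(psnr(gt + 0.1, gt), 2))  # 20.0
```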

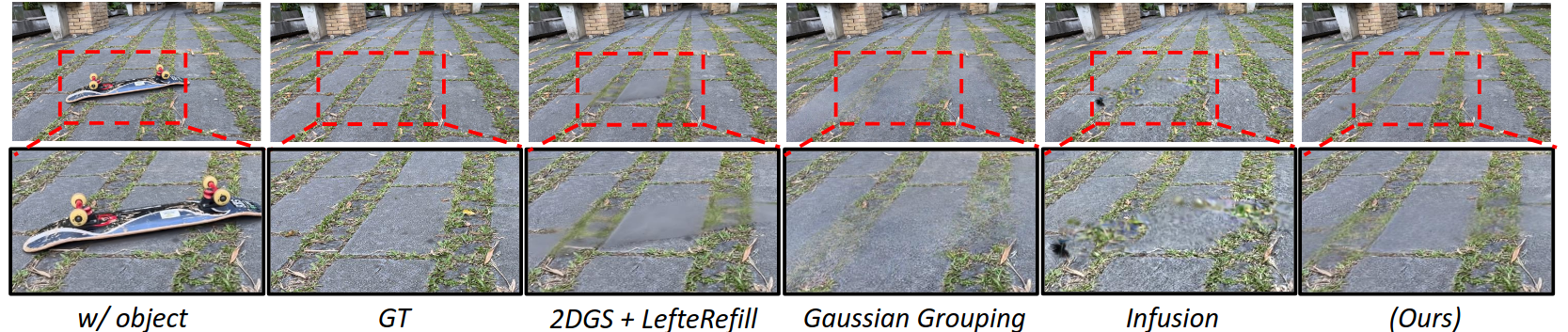

Visually, the method produces seamless inpainted regions that blend naturally into the scene, preserving fine details and overall structure even under dramatic viewpoint variations.

Conclusion

AuraFusion360 is a significant advance in 3D scene inpainting, addressing the challenge of maintaining view consistency and geometric accuracy in unbounded 360° environments. Because its inpainted regions blend with the existing scene even under large viewpoint changes, it produces coherent and realistic reconstructions. This makes the approach relevant to applications such as virtual reality, architectural visualization, and immersive media, where high-quality, consistent 3D environments are essential.

References

[1] Chung-Ho Wu, Yang-Jung Chen, Ying-Huan Chen, Jie-Ying Lee, Bo-Hsu Ke, Chun-Wei Tuan Mu, Yi-Chuan Huang, Chin-Yang Lin, Min-Hung Chen, Yen-Yu Lin, and Yu-Lun Liu, “AuraFusion360: Augmented Unseen Region Alignment for Reference-based 360° Unbounded Scene Inpainting,” arXiv preprint arXiv:2502.05176, 2025. [Online]. Available: https://arxiv.org/abs/2502.05176.