Ugly duckling detection using deep learning

The paper "Using deep learning for dermatologist-level detection of suspicious pigmented skin lesions from wide-field images" by Soenksen et al. (2021) [1] presents a deep learning system that identifies suspicious pigmented skin lesions, such as potential melanomas, in wide-field images. The system relies on convolutional neural networks (CNNs) and performs at a level comparable to that of experienced dermatologists.

Introduction

In the study, lesion segmentation and feature extraction are key steps in detecting suspicious lesions. Segmentation isolates individual lesions, and feature extraction produces the information the deep learning model uses to differentiate between benign and malignant lesions.

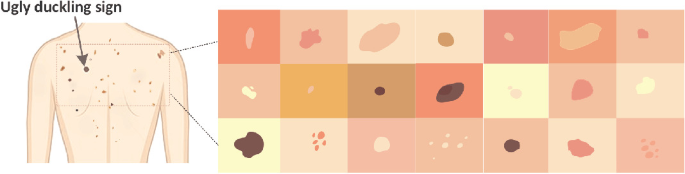

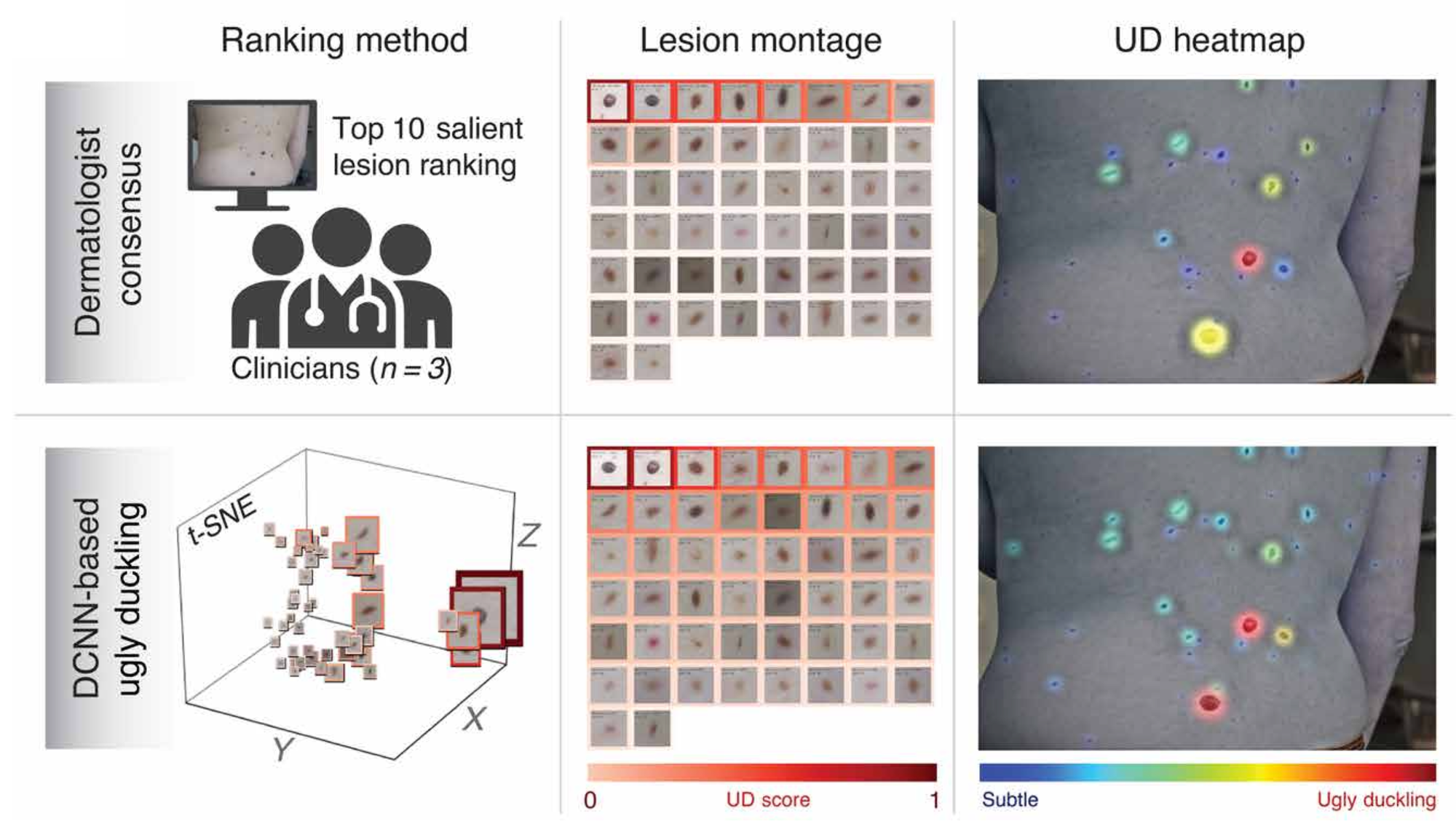

The "ugly duckling" detection method presented in the paper is an innovative approach inspired by a heuristic often used by dermatologists. The heuristic rests on the observation that a malignant lesion often looks different from the other, benign moles on the same patient's skin, standing out as the "ugly duckling".

Task

The primary task of the study is to develop a deep learning model that can automatically detect suspicious pigmented skin lesions from wide-field images with accuracy comparable to that of professional dermatologists. The goal is to improve the efficiency and reliability of skin cancer screening by providing a tool that can assist in identifying potential melanomas early on.

The system is designed to generate:

- Suspiciousness classifications at the single-lesion level, marked on the wide-field image,

- Ugly duckling heatmaps showing intra-patient lesion saliencies.

The ugly duckling method focuses on identifying lesions that are atypical when compared to other moles on the same individual. Its advantages are:

- Unlike traditional methods that analyze lesions in isolation, the ugly duckling method benefits from the contextual comparison of multiple lesions on the same individual,

- By focusing on atypical lesions, the method potentially improves the detection of early melanomas, which might not stand out based on individual lesion analysis alone.
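A minimal sketch of the intra-patient comparison, assuming each lesion of one patient has already been turned into a feature vector (for example, by a CNN): each lesion is scored by its Euclidean distance from the patient's mean feature vector, and the distances are min-max scaled within that patient. The function name `ugly_duckling_scores` and the choice of distance are illustrative assumptions, not the paper's exact formulation.

```python
import numpy as np


def ugly_duckling_scores(features: np.ndarray) -> np.ndarray:
    """Score how atypical each lesion is relative to the same patient's other lesions.

    Hypothetical formulation: Euclidean distance of each lesion's feature vector
    from the patient's mean feature vector, min-max scaled to [0, 1].
    features: shape (n_lesions, n_features), all rows from ONE patient.
    """
    centroid = features.mean(axis=0)
    dist = np.linalg.norm(features - centroid, axis=1)
    span = dist.max() - dist.min()
    if span == 0:  # all lesions equally typical: no ugly duckling
        return np.zeros_like(dist)
    return (dist - dist.min()) / span


# Four similar moles and one outlier (index 4).
features = np.array([[1.0, 1.0], [1.1, 0.9], [0.9, 1.1], [1.0, 1.05], [5.0, -3.0]])
scores = ugly_duckling_scores(features)
ranking = np.argsort(-scores)  # most atypical lesion first
print(scores.round(2), ranking)
```

Because the scores are scaled per patient, a lesion is only ever judged against that patient's other moles, which is the point of the heuristic.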

Method

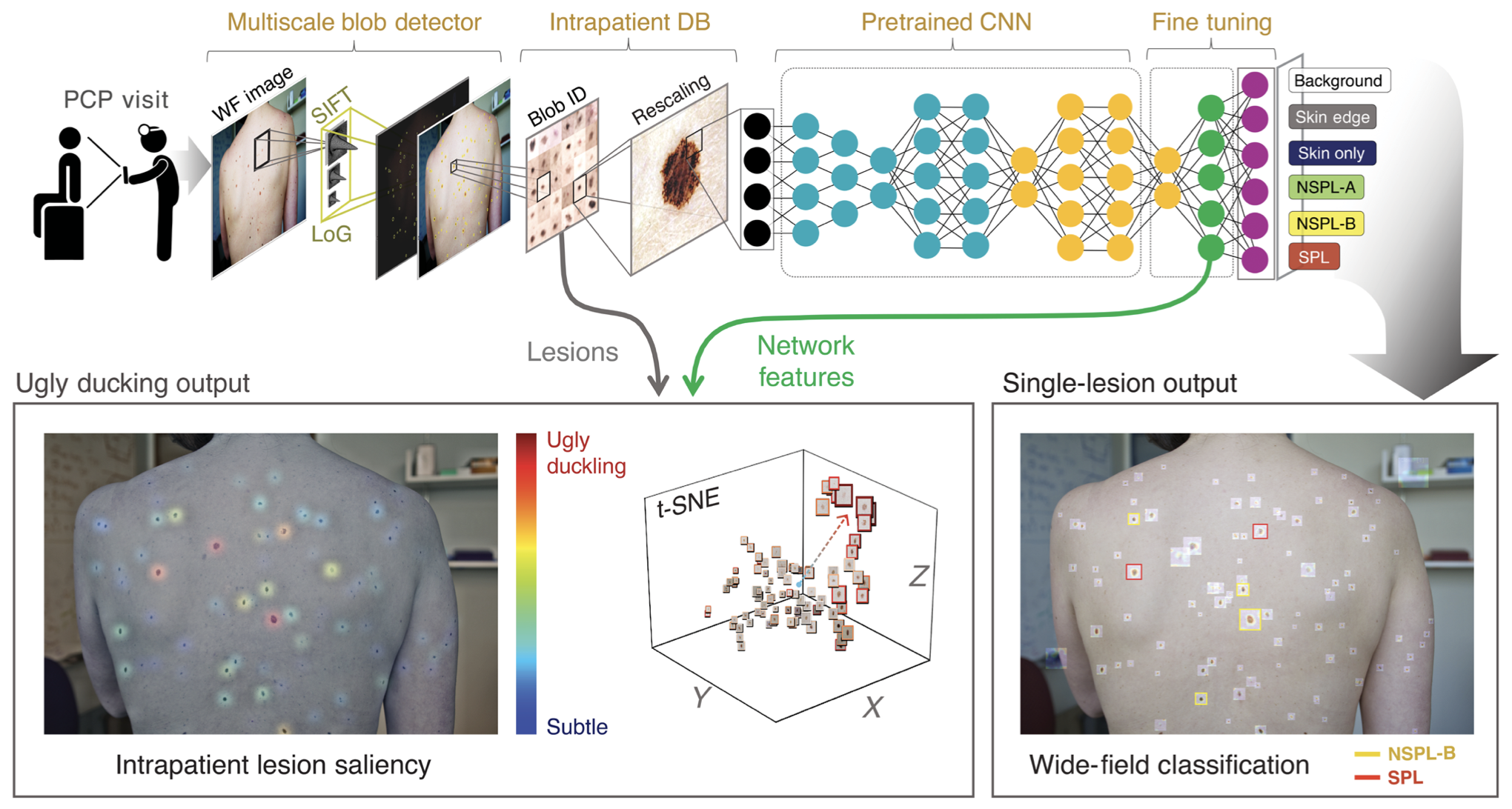

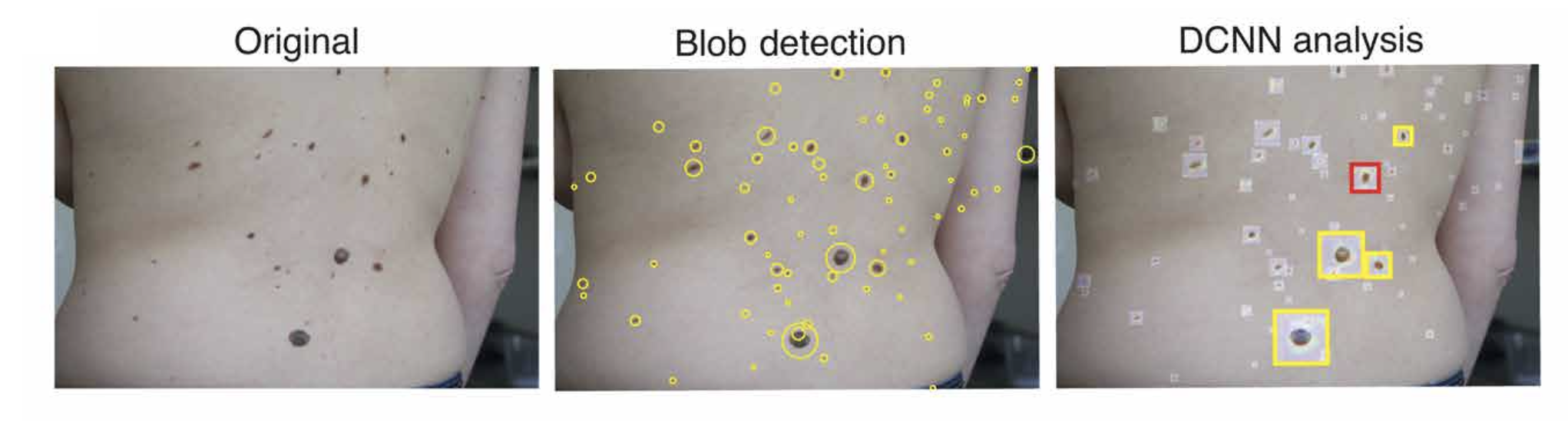

System data flow:

- A wide-field patient image is acquired by the user (or primary physician) and fed to the algorithm

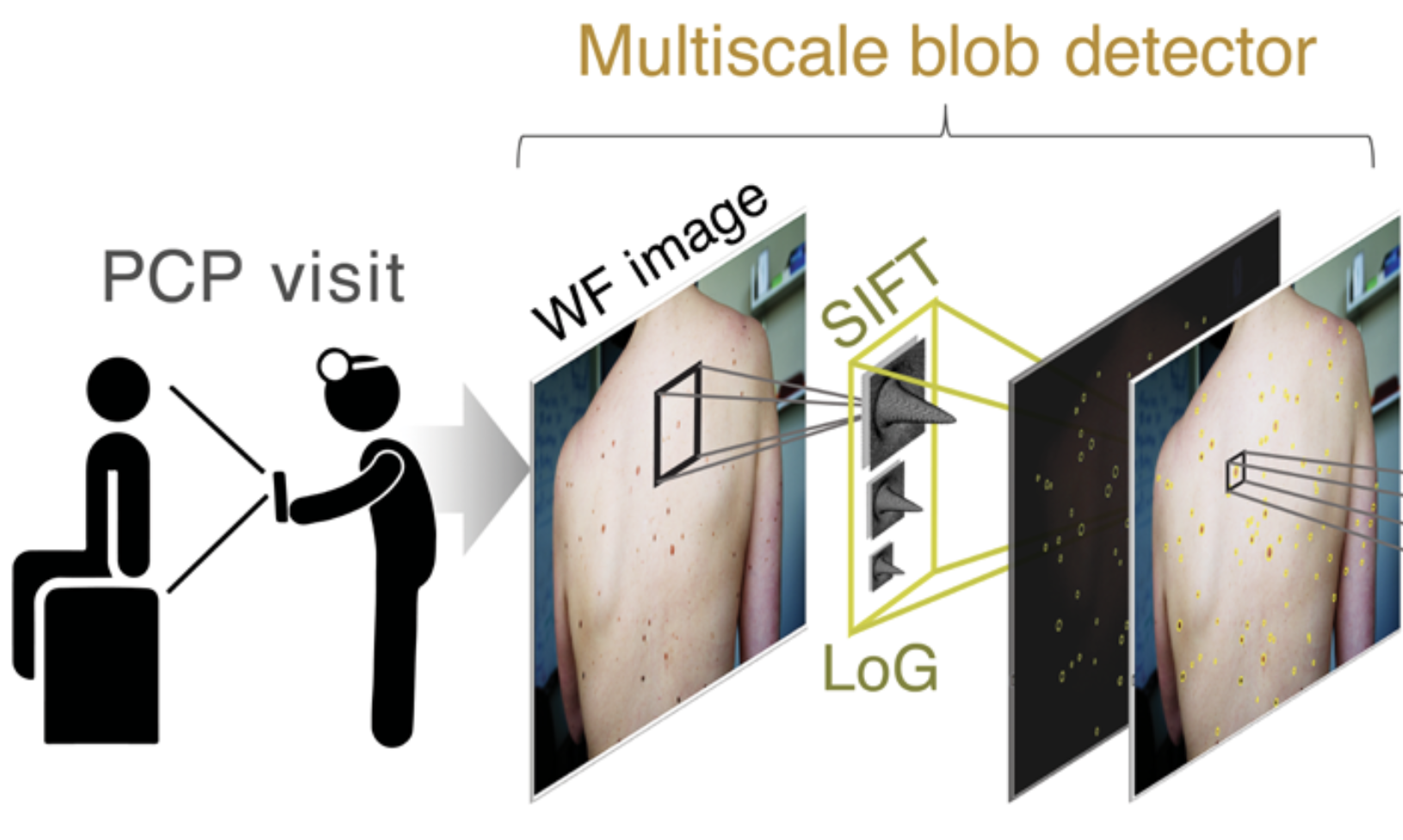

- A blob detection algorithm based on the Laplacian of Gaussian (LoG) and the scale-invariant feature transform (SIFT) detects blob-like regions

- Detected blobs are cropped and stored in an intrapatient repository

- The stored images are fed into a pre-trained deep classifier
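The crop-and-store steps of this data flow can be sketched as follows. The blob format `(row, col, sigma)`, the crop margin of three sigmas, the 32x32 output size, nearest-neighbor resizing, and the `classify` callable standing in for the pre-trained classifier are all hypothetical choices made for illustration.

```python
import numpy as np


def crop_blob(image, row, col, sigma, scale=3.0, out_size=32):
    """Crop a square window centered on a detected blob and rescale it to
    out_size x out_size with nearest-neighbor sampling.

    The half-width scale * sigma is an assumed margin; the window is clipped
    at the image border.
    """
    half = max(1, int(round(scale * sigma)))
    r0, r1 = max(0, row - half), min(image.shape[0], row + half + 1)
    c0, c1 = max(0, col - half), min(image.shape[1], col + half + 1)
    patch = image[r0:r1, c0:c1]
    # Nearest-neighbor resize: pick evenly spaced source rows and columns.
    ri = np.linspace(0, patch.shape[0] - 1, out_size).round().astype(int)
    ci = np.linspace(0, patch.shape[1] - 1, out_size).round().astype(int)
    return patch[np.ix_(ri, ci)]


def build_repository(image, blobs, classify):
    """Crop every blob, keep it with its location (the intrapatient repository),
    and attach the label returned by `classify` (a hypothetical stand-in for
    the pre-trained classifier)."""
    repository = []
    for row, col, sigma in blobs:
        crop = crop_blob(image, int(row), int(col), sigma)
        repository.append({"center": (int(row), int(col)), "sigma": float(sigma),
                           "crop": crop, "label": classify(crop)})
    return repository


image = np.linspace(0.0, 1.0, 100 * 100).reshape(100, 100)  # synthetic grayscale image
repo = build_repository(image, [(50, 50, 4.0), (2, 3, 2.0)],
                        classify=lambda crop: "SPL" if crop.mean() < 0.5 else "NSPL-A")
print([(entry["center"], entry["crop"].shape, entry["label"]) for entry in repo])
```

Rescaling every crop to one fixed size is what lets a single classifier process lesions of very different apparent sizes.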

Blob detection

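The Laplacian of Gaussian (LoG) is an image processing technique that combines Gaussian smoothing with the Laplacian operator. The Laplacian highlights regions of rapid intensity change, such as edges, and the preceding Gaussian smoothing suppresses the noise that this second-derivative operator would otherwise amplify [4]. When the response is scale-normalized (multiplied by σ², where σ is the standard deviation of the Gaussian), it peaks at the center of a blob whose size matches σ; for a disk of radius r the peak occurs at σ = r/√2. This is what makes LoG suitable for blob detection.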
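A minimal LoG blob detector, assuming dark lesions on lighter skin and a grayscale image scaled to [0, 1]. It stacks the scale-normalized response σ²·∇²(G_σ * I) over several σ and keeps points that are maxima of their 3x3x3 (scale, row, column) neighborhood. The scale list, the threshold, and the function name `log_blobs` are hypothetical. For reference, scikit-image ships a ready-made detector, `skimage.feature.blob_log`, which assumes bright blobs on a dark background (invert the image for dark ones).

```python
import numpy as np
from scipy import ndimage


def log_blobs(image, sigmas=(2, 3, 4, 6, 8), threshold=0.05):
    """Detect dark blobs with a scale-normalized Laplacian of Gaussian.

    Returns a list of (row, col, sigma) tuples.
    """
    image = np.asarray(image, dtype=float)
    # sigma^2 * LoG makes responses comparable across scales. A dark blob gives
    # a positive response at its center, a bright blob a negative one.
    stack = np.stack([s ** 2 * ndimage.gaussian_laplace(image, sigma=s)
                      for s in sigmas])
    # Keep points that are maxima of their 3x3x3 space-scale neighborhood.
    local_max = ndimage.maximum_filter(stack, size=3, mode="nearest") == stack
    peaks = np.argwhere(local_max & (stack > threshold))
    return [(int(r), int(c), float(sigmas[k])) for k, r, c in peaks]


# Synthetic test image: a dark disk of radius 6 on a bright background.
yy, xx = np.ogrid[:64, :64]
img = np.ones((64, 64))
img[(yy - 32) ** 2 + (xx - 32) ** 2 <= 36] = 0.0
print(log_blobs(img))
```

For the radius-6 disk, the strongest response is expected near σ = 6/√2 ≈ 4.2, so the detection at the disk center is reported at σ = 4 from the scale list above.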

Scale-Invariant Feature Transform (SIFT) is a computer vision algorithm used to detect and describe local features in images. It was introduced by David Lowe in 1999 [5] and has become a standard method for feature detection and matching due to its robustness to changes in scale, rotation, and illumination.

Key Steps in SIFT [6]:

- Scale-Space Extrema Detection

- Keypoint Localization

- Orientation Assignment

- Keypoint Descriptor
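Steps 1 and 3 can be sketched as follows for a single octave; the sub-pixel localization and edge-response rejection of step 2 and the descriptor of step 4 are left out. Step 1 uses the Difference of Gaussians D = G(kσ) * I − G(σ) * I, which approximates the scale-normalized LoG up to a constant factor, D ≈ (k − 1)σ²∇²G * I; this is why SIFT's detector behaves like a LoG blob detector. Step 3 builds a 36-bin, magnitude-weighted histogram of gradient directions around the keypoint and takes its peak. σ₀ = 1.6, the 36 bins, and the 1.5σ window follow commonly cited SIFT settings; k = √2, the threshold, and the function names are assumptions.

```python
import numpy as np
from scipy import ndimage


def dog_extrema(image, sigma0=1.6, k=2 ** 0.5, num_scales=5, threshold=0.02):
    """Step 1 (simplified, single octave): scale-space extrema of the
    Difference of Gaussians D = G(k*sigma) * I - G(sigma) * I.

    Returns (row, col, sigma) tuples; no step-2 refinement is applied.
    """
    image = np.asarray(image, dtype=float)
    sigmas = [sigma0 * k ** i for i in range(num_scales + 1)]
    blurred = np.stack([ndimage.gaussian_filter(image, s) for s in sigmas])
    dog = blurred[1:] - blurred[:-1]  # shape (num_scales, H, W)
    # A candidate must be the max or min of its 3x3x3 space-scale neighborhood.
    is_max = ndimage.maximum_filter(dog, size=3, mode="nearest") == dog
    is_min = ndimage.minimum_filter(dog, size=3, mode="nearest") == dog
    hits = np.argwhere((is_max | is_min) & (np.abs(dog) > threshold))
    # Skip the first and last DoG levels: they lack a scale neighbor on one side.
    return [(int(r), int(c), sigmas[s]) for s, r, c in hits
            if 0 < s < num_scales - 1]


def dominant_orientation(image, row, col, sigma, num_bins=36):
    """Step 3: peak of a magnitude-weighted histogram of gradient directions
    around the keypoint, using a Gaussian window of 1.5 * sigma."""
    smooth = ndimage.gaussian_filter(np.asarray(image, dtype=float), sigma)
    gy, gx = np.gradient(smooth)  # derivatives along rows (y) and columns (x)
    radius = max(1, int(round(3 * 1.5 * sigma)))
    r0, r1 = max(0, row - radius), min(smooth.shape[0], row + radius + 1)
    c0, c1 = max(0, col - radius), min(smooth.shape[1], col + radius + 1)
    yy, xx = np.mgrid[r0:r1, c0:c1]
    window = np.exp(-((yy - row) ** 2 + (xx - col) ** 2) / (2 * (1.5 * sigma) ** 2))
    mag = np.hypot(gx[r0:r1, c0:c1], gy[r0:r1, c0:c1]) * window
    ang = np.arctan2(gy[r0:r1, c0:c1], gx[r0:r1, c0:c1]) % (2 * np.pi)
    hist, edges = np.histogram(ang, bins=num_bins, range=(0.0, 2 * np.pi), weights=mag)
    peak = int(np.argmax(hist))
    return 0.5 * (edges[peak] + edges[peak + 1])  # center of the winning bin, radians
```

Assigning each keypoint a reference orientation is what later lets the descriptor be computed in a rotation-normalized frame.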

Using both the Laplacian of Gaussian (LoG) and Scale-Invariant Feature Transform (SIFT) together can leverage the strengths of both techniques for more robust feature detection and description. Here's how you can integrate LoG and SIFT:

- LoG for Initial Keypoint Detection: Use the Laplacian of Gaussian to detect blob-like structures in the image

- SIFT for Keypoint Description: Once you have the keypoints from LoG, use SIFT to compute descriptors for these keypoints
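A simplified, SIFT-style descriptor for keypoints that a LoG detector has already found, assuming the keypoints arrive as `(row, col, sigma)` tuples. It histograms gradient orientations into 8 bins over a 4x4 grid of cells around each keypoint, giving a 128-dimensional vector, then normalizes it, clips values at 0.2, and renormalizes, as SIFT does to reduce sensitivity to illumination changes. Unlike full SIFT, it does not rotate the window to the keypoint's dominant orientation, apply Gaussian weighting, or interpolate between cells and bins; the cell size tied to σ and the function name are assumptions. With OpenCV, a comparable pipeline wraps LoG detections as `cv2.KeyPoint` objects and passes them to `cv2.SIFT_create().compute(image, keypoints)`.

```python
import numpy as np
from scipy import ndimage


def sift_like_descriptor(image, row, col, sigma, grid=4, bins=8):
    """Simplified SIFT-style descriptor (grid*grid*bins = 128 values by default)
    for a keypoint (row, col, sigma) found by a LoG detector.

    Simplifications vs. full SIFT: no rotation to the dominant orientation,
    no Gaussian weighting, no interpolation between cells or bins.
    """
    smooth = ndimage.gaussian_filter(np.asarray(image, dtype=float), sigma)
    gy, gx = np.gradient(smooth)
    cell = max(1, int(round(sigma)))  # assumed: cell side grows with keypoint scale
    side = grid * cell
    pad = side // 2 + 1
    # Zero-pad the gradients so keypoints near the border still get a full window.
    gx, gy = np.pad(gx, pad), np.pad(gy, pad)
    r, c = row + pad - side // 2, col + pad - side // 2
    wx, wy = gx[r:r + side, c:c + side], gy[r:r + side, c:c + side]
    mag = np.hypot(wx, wy)
    ang = np.arctan2(wy, wx) % (2 * np.pi)
    bin_idx = np.minimum((ang / (2 * np.pi) * bins).astype(int), bins - 1)
    desc = np.zeros((grid, grid, bins))
    for i in range(grid):
        for j in range(grid):
            rows = slice(i * cell, (i + 1) * cell)
            cols = slice(j * cell, (j + 1) * cell)
            desc[i, j] = np.bincount(bin_idx[rows, cols].ravel(),
                                     weights=mag[rows, cols].ravel(), minlength=bins)
    desc = desc.ravel()
    desc /= max(np.linalg.norm(desc), 1e-12)  # unit length
    desc = np.minimum(desc, 0.2)              # damp dominant gradients (illumination)
    return desc / max(np.linalg.norm(desc), 1e-12)


# A dark synthetic "lesion" and a keypoint as a LoG detector might report it.
image = np.ones((64, 64))
yy, xx = np.ogrid[:64, :64]
image[(yy - 32) ** 2 + (xx - 32) ** 2 <= 36] = 0.0
keypoints = [(32, 32, 4.0)]
descriptors = np.array([sift_like_descriptor(image, r, c, s) for r, c, s in keypoints])
print(descriptors.shape)
```

Descriptors from different lesions can then be compared with ordinary vector distances.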

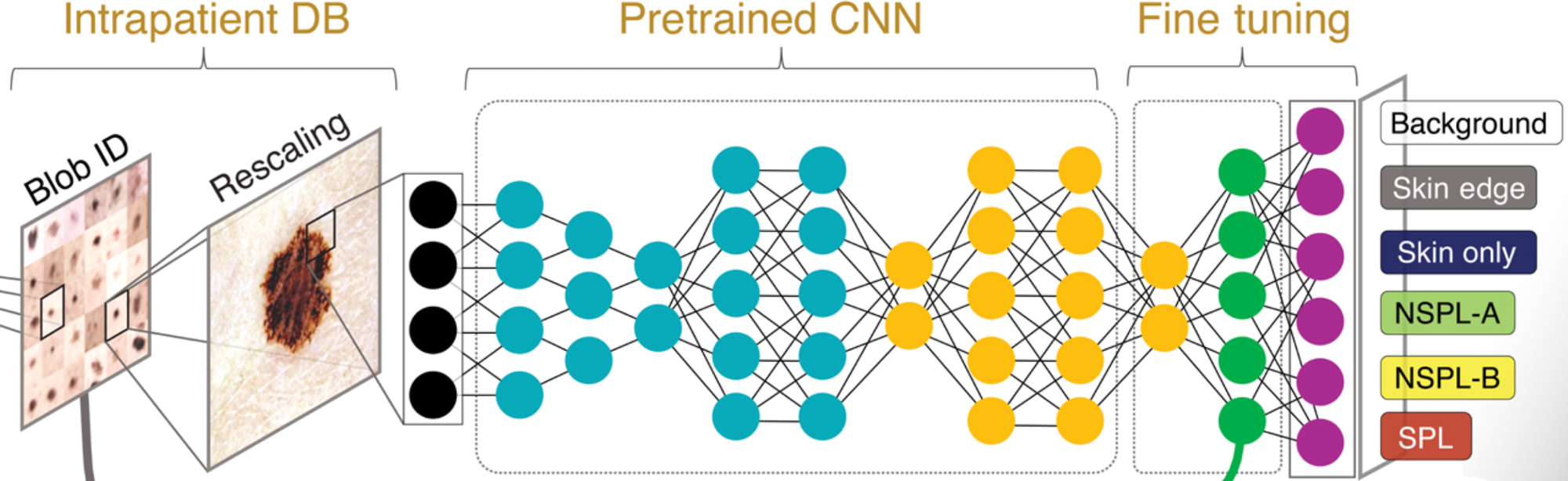

Classification

The paper uses convolutional neural networks (CNNs), a type of deep learning model particularly well suited to image analysis. The researchers designed and trained a CNN that assigns each single-lesion crop to one of six classes, separating suspicious pigmented lesions from non-suspicious lesions, bare skin, skin edges, and backgrounds.

The model is trained on a large labeled dataset in which the ground-truth class of every image is known. Diverse training examples help the model generalize across different skin types and lesion characteristics.
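To make the classification step concrete, here is a toy forward pass of a small CNN in NumPy: two 3x3 convolution layers with ReLU, global average pooling, and a softmax over the six classes listed in the Data section. The architecture, layer sizes, class order, and random (untrained) weights are hypothetical. The paper's network is a much deeper model trained on the labeled crops, so this only illustrates how a crop flows through a CNN to class probabilities.

```python
import numpy as np

# Hypothetical class order; the six classes are those listed in the Data section.
CLASSES = ["background", "skin edge", "bare skin", "NSPL-A", "NSPL-B", "SPL"]


def conv2d(x, kernels):
    """'Valid' 2-D convolution as used in CNN layers (cross-correlation).

    x: (H, W, C_in); kernels: (kh, kw, C_in, C_out) -> (H-kh+1, W-kw+1, C_out).
    """
    kh, kw = kernels.shape[:2]
    # windows has shape (H-kh+1, W-kw+1, C_in, kh, kw)
    windows = np.lib.stride_tricks.sliding_window_view(x, (kh, kw), axis=(0, 1))
    return np.einsum("hwcij,ijco->hwo", windows, kernels)


def forward(crop, params):
    """Toy CNN: conv-ReLU, conv-ReLU, global average pooling, dense, softmax."""
    h = np.maximum(conv2d(crop, params["k1"]) + params["b1"], 0.0)
    h = np.maximum(conv2d(h, params["k2"]) + params["b2"], 0.0)
    pooled = h.mean(axis=(0, 1))                 # one value per feature map
    logits = pooled @ params["w"] + params["b"]  # one score per class
    e = np.exp(logits - logits.max())            # numerically stable softmax
    return e / e.sum()


# Random, UNTRAINED weights: the probabilities are meaningless until training.
rng = np.random.default_rng(0)
params = {
    "k1": rng.normal(0.0, 0.1, (3, 3, 3, 8)), "b1": np.zeros(8),
    "k2": rng.normal(0.0, 0.1, (3, 3, 8, 16)), "b2": np.zeros(16),
    "w": rng.normal(0.0, 0.1, (16, len(CLASSES))), "b": np.zeros(len(CLASSES)),
}
probs = forward(rng.random((32, 32, 3)), params)  # a 32x32 RGB crop
print(dict(zip(CLASSES, probs.round(3))))
```

Training would adjust the kernels and dense weights so that the probability mass concentrates on the correct class for each labeled crop.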

Data

The study used a large dataset of wide-field images containing pigmented skin lesions. The images were labeled by expert dermatologists, providing the ground truth for training and validating the model. Covering a diverse range of skin types, lesion appearances, and imaging conditions is what allows such a model to generalize to real-world scenarios.

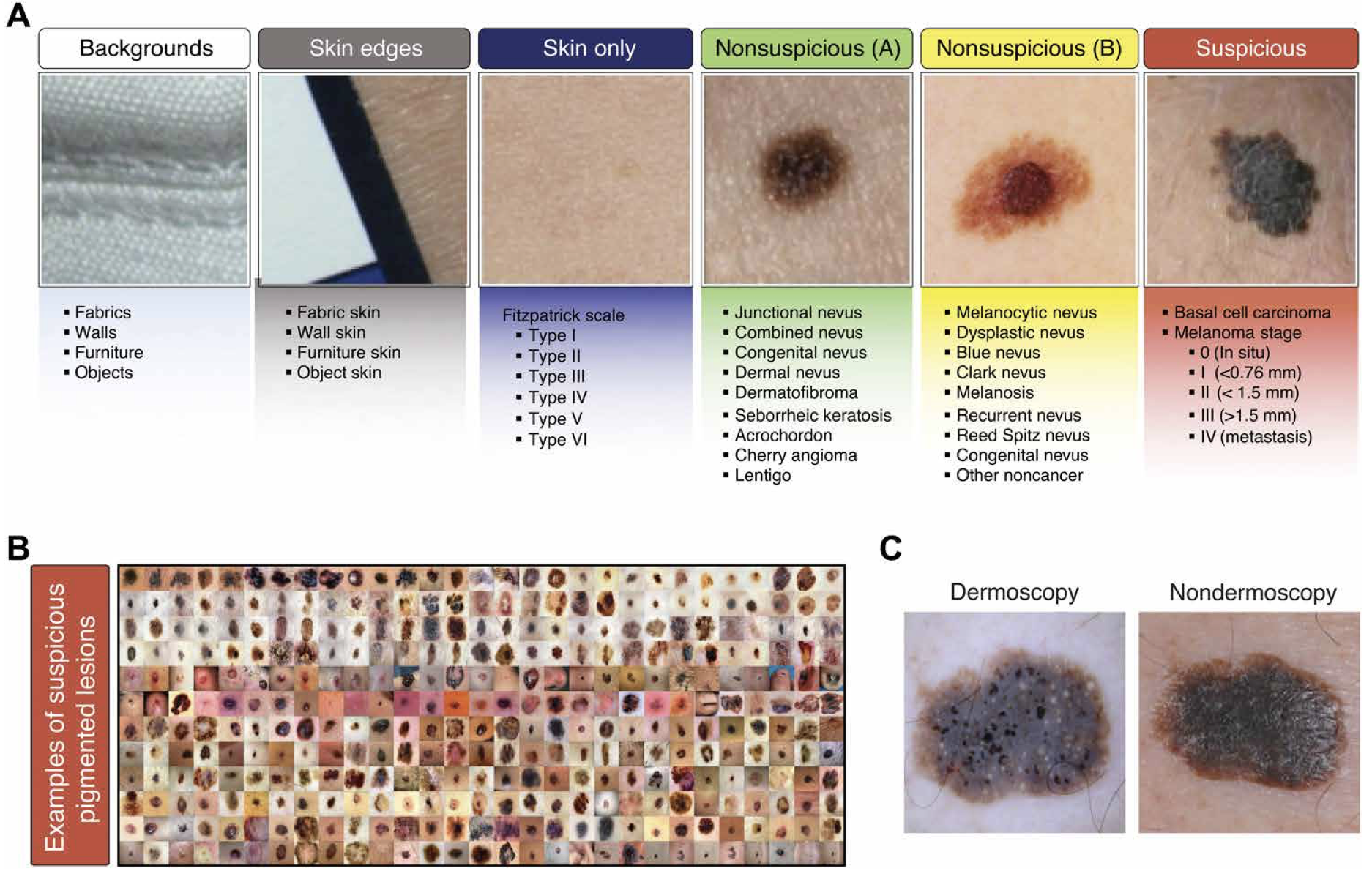

The dataset contains 38,283 individually labeled, non-overlapping image crops divided into six classes:

- Backgrounds: 8,888 images

- Skin edges: 2,528 images

- Bare skin sections: 10,935 images

- Non-suspicious pigmented lesions, type A (NSPL-A, low priority): 10,759 images

- Non-suspicious pigmented lesions, type B (NSPL-B, medium priority): 1,110 images

- Suspicious pigmented lesions (SPL): 4,063 images
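The class counts above add up to 38,283 crops and are clearly imbalanced: NSPL-B makes up under 3% of the data and SPL about 10.6%. One common way to compensate during training, shown here as an illustration rather than as the paper's documented procedure, is inverse-frequency class weighting, w_c = N / (K · n_c), where N is the total number of crops, K = 6 is the number of classes, and n_c is the count of class c.

```python
import numpy as np

counts = {"Backgrounds": 8888, "Skin edges": 2528, "Bare skin": 10935,
          "NSPL-A": 10759, "NSPL-B": 1110, "SPL": 4063}

n = np.array(list(counts.values()), dtype=float)
total = n.sum()
share = n / total
# Inverse-frequency ("balanced") weights: rare classes count more in the loss.
weights = total / (len(n) * n)
for name, s, w in zip(counts, share, weights):
    print(f"{name:12s} {s:6.1%}  weight={w:.2f}")
```

With these weights every class contributes the same total weight (N / K) to the loss, so the rare NSPL-B and SPL crops are not drowned out by backgrounds and bare skin.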

Results

The study reports that the model detected suspicious lesions with high accuracy, performing on par with dermatologists.

In an example wide-field image showing multiple pigmented lesions on a female subject's back, a SIFT-based blob detection algorithm identifies keypoints at various scales to locate and crop individual lesions. Each rescaled single-lesion image is then classified by a deep convolutional neural network (DCNN), and the resulting activation map is superimposed on the original wide-field image. Lesions classified as NSPL-B (non-suspicious pigmented lesion, type B) are highlighted in yellow, and those classified as SPL (suspicious pigmented lesion) in red.
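The color coding described above can be sketched as drawing square outlines on an RGB copy of the image. The `(row, col)` lesion centers, the box size, the line thickness, and the exact color values are hypothetical; the figure itself overlays a DCNN activation map rather than plain boxes.

```python
import numpy as np

# Hypothetical color scheme mirroring the figure: yellow for NSPL-B, red for SPL.
COLORS = {"NSPL-B": (255, 255, 0), "SPL": (255, 0, 0)}


def draw_markers(image_rgb, lesions, half=8, thickness=2):
    """Return a copy of an RGB uint8 image with a square outline around each
    lesion whose label has a color; lesions with other labels stay unmarked.

    lesions: iterable of ((row, col), label) pairs.
    """
    out = image_rgb.copy()
    H, W = out.shape[:2]
    for (row, col), label in lesions:
        color = COLORS.get(label)
        if color is None:
            continue
        r0, r1 = max(0, row - half), min(H, row + half + 1)
        c0, c1 = max(0, col - half), min(W, col + half + 1)
        out[r0:r0 + thickness, c0:c1] = color  # top edge
        out[r1 - thickness:r1, c0:c1] = color  # bottom edge
        out[r0:r1, c0:c0 + thickness] = color  # left edge
        out[r0:r1, c1 - thickness:c1] = color  # right edge
    return out


img = np.zeros((50, 50, 3), dtype=np.uint8)
marked = draw_markers(img, [((10, 10), "SPL"), ((30, 10), "NSPL-A"), ((40, 40), "NSPL-B")])
```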

Another figure compares intrapatient lesion rankings and ugly duckling (UD) heatmaps derived from dermatologists' consensus with those derived from features extracted by a DCNN. The accompanying t-SNE plot shows how all lesions within the field of view cluster in feature space.
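A UD heatmap can be rendered from per-lesion scores by placing a Gaussian bump at each lesion center, scaled by that lesion's score. This is a minimal sketch: the bump width `sigma`, taking the per-pixel maximum so that neighboring bumps do not add up, and the function name are assumptions, not the paper's rendering method.

```python
import numpy as np


def ud_heatmap(shape, centers, scores, sigma=10.0):
    """Render per-lesion ugly duckling scores as a smooth heatmap.

    Each lesion contributes a Gaussian bump at its center, scaled by its score;
    overlapping bumps keep the larger value instead of being summed.
    """
    yy, xx = np.mgrid[:shape[0], :shape[1]]
    heat = np.zeros(shape, dtype=float)
    for (row, col), score in zip(centers, scores):
        bump = score * np.exp(-((yy - row) ** 2 + (xx - col) ** 2) / (2 * sigma ** 2))
        heat = np.maximum(heat, bump)
    return heat


heat = ud_heatmap((60, 80), centers=[(10, 10), (40, 60)], scores=[0.2, 1.0])
print(np.unravel_index(heat.argmax(), heat.shape))
```

The resulting array can be color-mapped and alpha-blended over the wide-field image so that the most atypical lesions glow brightest.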

Conclusion

In summary, the paper presents a method for detecting suspicious pigmented skin lesions in wide-field images using deep learning. By combining CNN-based single-lesion classification with the ugly duckling heuristic, the system reaches dermatologist-level accuracy and could make skin cancer screening more efficient, supporting earlier melanoma detection and better patient outcomes.

References

- [1] Soenksen, L. R., Kassis, T., Conover, S. T., Marti-Fuster, B., Birkenfeld, J. S., Tucker-Schwartz, J., ... & Gray, M. L. (2021). Using deep learning for dermatologist-level detection of suspicious pigmented skin lesions from wide-field images. Science Translational Medicine, 13(581), eabb3652.

- [2] Gogoi, U. R., Bhowmik, M. K., Saha, P., Bhattacharjee, D., & De, B. K. (2015). Facial mole detection: an approach towards face identification. Procedia Computer Science, 46, 1546-1553.

- [3] Yu, Z., Mar, V., Eriksson, A., Chandra, S., Bonnington, P., Zhang, L., & Ge, Z. (2021). End-to-end ugly duckling sign detection for melanoma identification with transformers. In Medical Image Computing and Computer Assisted Intervention, MICCAI 2021: 24th International Conference, Strasbourg, France, September 27 to October 1, 2021, Proceedings, Part VII (pp. 176-184). Springer International Publishing.

- [4] Raja Lini. (2023). Laplacian of Gaussian Filter (LoG) for Image Processing. https://medium.com/@rajilini/laplacian-of-gaussian-filter-log-for-image-processing-c2d1659d5d2

- [5] Lowe, D. G. (1999, September). Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision (Vol. 2, pp. 1150-1157). IEEE.

- [6] jun94. (2020). [CV] 13. Scale-Invariant Local Feature Extraction (3): SIFT. https://medium.com/jun94-devpblog/cv-13-scale-invariant-local-feature-extraction-3-sift-315b5de72d48