Prompt Depth Anything

Latest development in Depth Map Estimation with AI.

Introduction

In recent years, the field of artificial intelligence has made significant strides in depth map estimation, a crucial component for various applications, including robotics, augmented reality, and autonomous vehicles. This blog post will summarize key insights from a recent paper on the advancements in depth map estimation AI, particularly focusing on the Prompt Depth Anything model.

Goals of Depth Map Estimation

The primary objective of this new depth map estimation is to achieve accurate scale measurements in meters, allowing for real-world distance calculations. Prompt Depth Anything model has several additional goal:

- Speed: The model aims for real-time processing capabilities, on high-performance hardware like the A100 GPU.

- Precision: Ensuring that the depth maps generated are accurate and reliable.

- Detail: The model is designed to capture fine details, even in complex scenes involving transparent objects and mirrors.

LiDAR Technology

LiDAR, which stands for Light Detection and Ranging, is a technology that measures distances by sending out a ray of light and calculating the time it takes for the light to return. This technology can generate depth maps, but it comes with its own set of challenges:

- Resolution: For instance, the LiDAR system in iPhones has a low resolution of 24 × 24 pixels, which is then upscaled by ARKit to 256 × 192 pixels using AI.

- Imprecision: There are common issues with distance accuracy, flickering, and difficulties in capturing transparent objects.

Depth map

A depth map is related to a classical image, for each pixel it indicates the distance to the camera of the corresponding point in space.

Challenges in Depth Map Computation

While there are already tools for computing depth maps from images, they often face limitations:

- Lack of Proper Scale: Many tools do not provide depth maps with accurate scaling. Relative distances are preserved but not absolute.

- Imprecision: The generated depth maps can be unreliable, particularly in complex environments with mirrors or transparent surfaces.

Advancements with Depth Anything v2

Prompt Depth Anything [1] is an extension of Depth Anything V2 [3], evolution of Depth Anything [2], itself based on MiDAS [4, 5, 6] architecture, constructed using Vision Transformer [7].

- Training on Synthetic Data: Depth Anything V2 is trained using a large teacher model (DINOv2-G) on synthetic data, which allows for perfect depth annotation.

- Auto-Annotated Data: 62 millions images are annotated using the teacher model, then a second model, Depth Anything V2, is trained based on those annotation.

Prompt Depth Anything

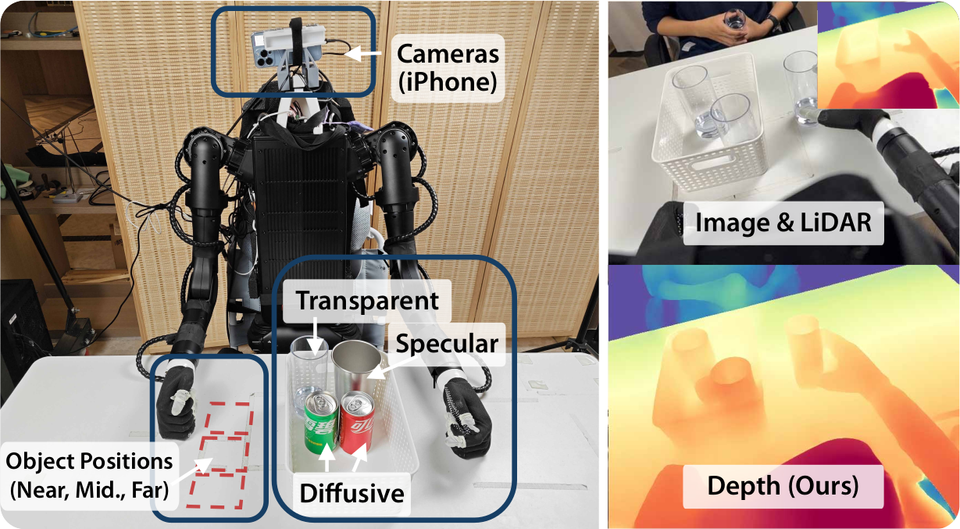

This new AI model fuse the low quality iPhone's depth map within the Depth Anything V2 model to prompt/condition up-scaling.

- Initial weights: The model weight are initialized with Depth Anything V2 weight, and zero for the new weight.

- Training data: The model is trained using a mix of synthetic data, real data and pseudo-real data.

- Loss: Use a edge-aware loss. It forces the model to predict precisely the edges, where the depth has a huge variation, it is based on the gradient.

Results

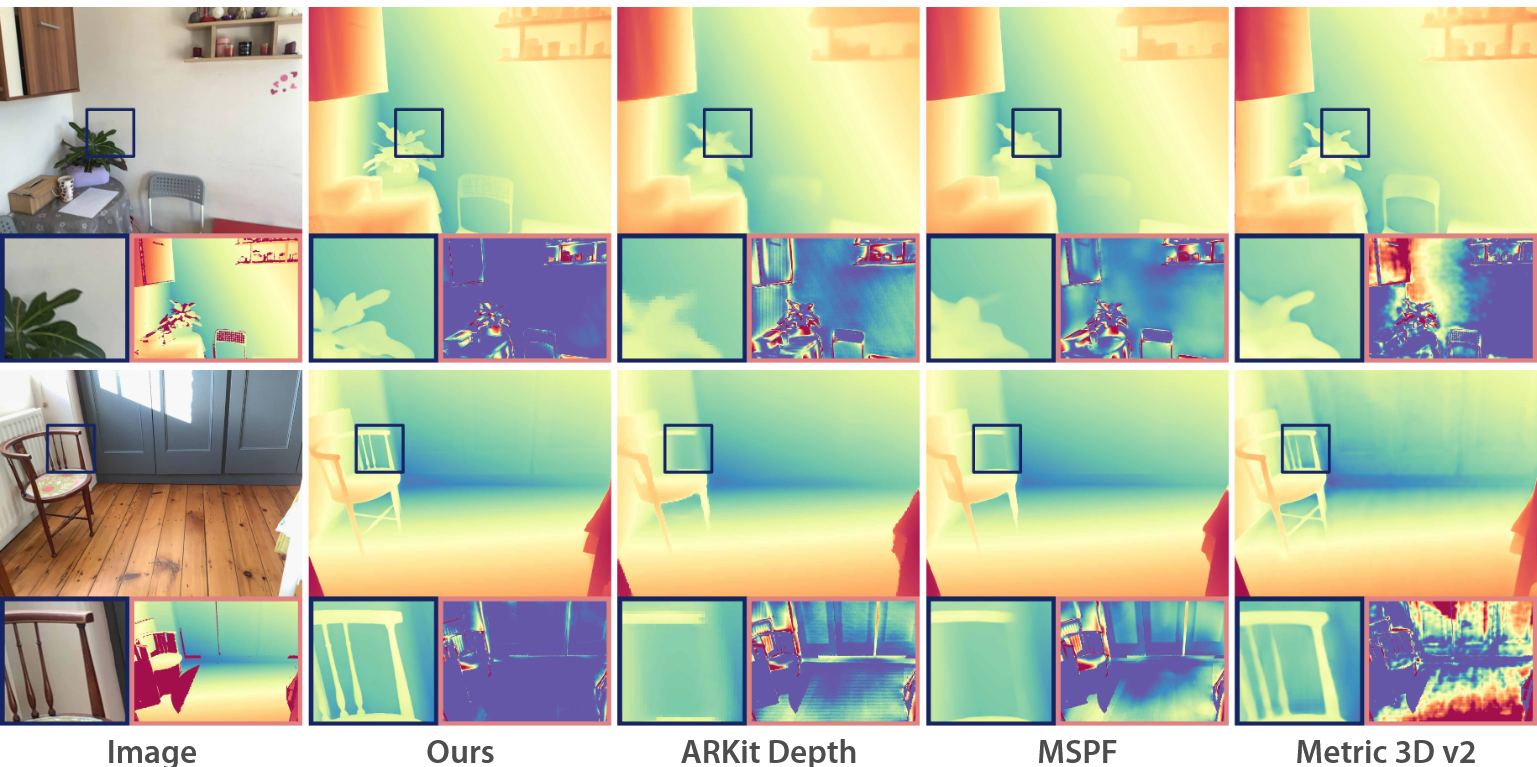

The new model performance reach state of the art for depth map for various metrics. It allows to measure rather precisely distance within an image as illustrated below.

In addition thanks to the edge-aware loss and the precise synthetic depth map, the model is able to render fine details on the depth map.

Conclusion

The advancements in depth map estimation AI, particularly through models like Prompt Depth Anything, represent a significant leap forward in the field. By addressing the challenges of speed, precision, and detail, these models pave the way for more accurate and reliable applications in various industries. As technology continues to evolve, the potential for depth map estimation in AI is boundless, promising exciting developments in the near future.

References:

- H. Lin, S. Peng, J. Chen, S. Peng, J. Sun, M. Liu, H. Bao, J. Feng, X. Zhou, B. Kang,

Prompting Depth Anything for 4K Resolution Accurate Metric Depth Estimation.

Arxiv 2412.14015 - L. Yang, B. Kang, Z. Huang, X. Xu, J. Feng, H. Zhao,

Depth anything: Unleashing the power of large-scale unlabeled data.

In CVPR, pages 10371-10381. Arxiv 2401.10891 - L. Yang, B. Kang, Z. Huang, Z. Zhao, X. Xu, J. Feng, H. Zhao,

Depth anything V2.

Arxiv 2406.09414 - R. Ranftl, K. Lasinger, D. Hafner, K. Schindler, V. Koltun,

Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-shot Cross-dataset Transfer.

Arxiv 1907.01341 - R. Ranftl, A. Bochkovskiy, V. Koltun,

Vision Transformers for Dense Prediction.

Arxiv 2103.13413 - R. Birkl, D. Wofk, M. Müller,

MiDaS v3.1 -- A Model Zoo for Robust Monocular Relative Depth Estimation.

Arxiv 2307.14460 -

A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly, J. Uszkoreit, N. Houlsby,

An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale.

Arxiv 2010.11929