Unsupervised Learning in Spiking Neural Networks

Introduction

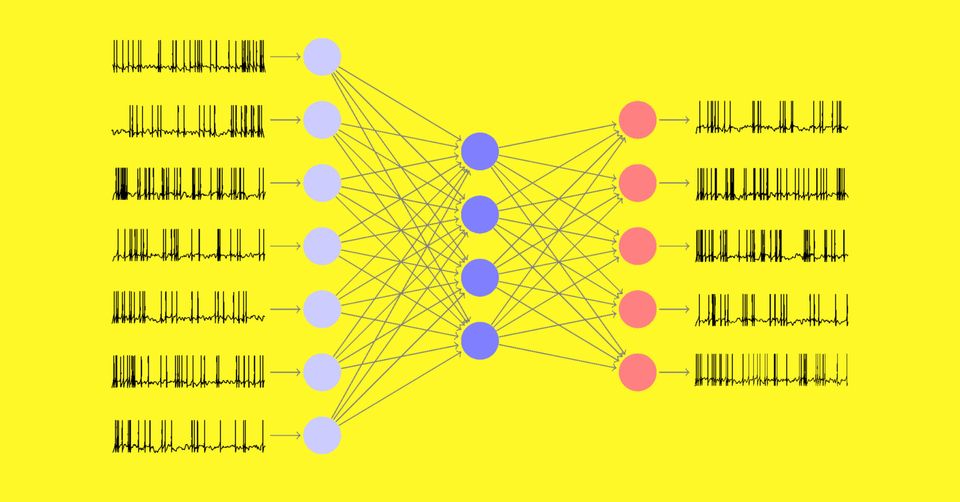

Spiking neural networks (SNNs) are computational models inspired by the behavior of biological neurons in the brain. Their neurons communicate through discrete events called spikes, which lets them capture temporal dynamics and process spatiotemporal patterns. This article describes two building blocks of SNNs: unsupervised learning with the spike-timing-dependent plasticity (STDP) rule, and leaky integrate-and-fire (LIF) neurons. It then walks through the learning mechanisms involved when STDP and LIF neurons are used together to learn from encoded images.

Spiking Neural Networks (SNNs)

SNNs consist of spiking neurons that transmit information through spikes. Each neuron integrates input signals over time and generates a spike when its membrane potential exceeds a certain threshold. The precise timing of spikes encodes information, allowing SNNs to capture temporal patterns and process spatiotemporal data.

Spike-Timing-Dependent Plasticity (STDP)

STDP is a synaptic learning rule that modifies synaptic weights based on the precise timing of pre- and postsynaptic spikes. The rule is a timing-sensitive form of Hebbian plasticity, often summarized as "cells that fire together wire together." If a presynaptic spike consistently arrives shortly before a postsynaptic spike, the connection between the two neurons is strengthened. Conversely, the connection is weakened if the postsynaptic spike precedes the presynaptic spike.

The STDP rule is typically expressed as a weight update function of the time difference between a presynaptic and a postsynaptic spike. It assigns positive weight changes (potentiation) to pairs in which the presynaptic spike comes first and negative weight changes (depression) to pairs in which the postsynaptic spike comes first. The magnitude of the weight change depends on the relative timing of the spikes, commonly shrinking as the gap between them grows, and on the learning rates associated with potentiation and depression. This biologically inspired rule allows SNNs to adapt their synaptic connections to the temporal patterns of input spikes.
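As an illustration, the weight update function can be sketched as a pair-based rule with exponential time windows. This is one common formulation, not necessarily the one any particular library uses. The function name `stdp_delta_w`, the learning rates `a_plus` and `a_minus`, and the time constants `tau_plus` and `tau_minus` are all assumptions chosen for the example.

```python
import numpy as np

def stdp_delta_w(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one spike pair, with dt = t_post - t_pre in ms.

    All parameter values are illustrative assumptions.
    """
    dt = np.asarray(dt, dtype=float)
    # Pre before post (dt > 0): potentiation that decays with the time gap.
    potentiation = a_plus * np.exp(-np.abs(dt) / tau_plus)
    # Post before pre (dt < 0): depression that decays with the time gap.
    depression = -a_minus * np.exp(-np.abs(dt) / tau_minus)
    # Simultaneous spikes (dt == 0) are left unchanged in this sketch.
    return np.where(dt > 0, potentiation, np.where(dt < 0, depression, 0.0))
```

Calling `stdp_delta_w(10.0)` gives a positive change and `stdp_delta_w(-10.0)` a negative one, and both shrink toward zero as the spikes move further apart in time.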

Leaky Integrate-and-Fire (LIF) Neurons

LIF neurons are commonly used in SNNs as a simplified model of biological neurons. These neurons integrate incoming spikes over time while accounting for the leakage of their membrane potential. Once the membrane potential reaches a threshold, the neuron generates a spike and resets its potential. LIF neurons provide a computationally efficient abstraction for simulating neural behavior in SNNs.
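The integrate, leak, fire, and reset cycle can be sketched in a few lines. The example below is a minimal discrete-time simulation of a single LIF neuron using forward Euler integration. The function name `simulate_lif` and every parameter value (time step, membrane time constant, threshold, reset value) are assumptions made for illustration, in arbitrary units.

```python
import numpy as np

def simulate_lif(input_current, dt=1.0, tau_m=20.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0):
    """Simulate one LIF neuron driven by a sequence of input values.

    Returns the membrane potential after each step and the spike times.
    All parameter values are illustrative assumptions.
    """
    v = v_rest
    potentials = np.empty(len(input_current))
    spike_times = []
    for step, current in enumerate(input_current):
        # Leak pulls v back toward v_rest while the input pushes it up.
        v += (dt / tau_m) * (v_rest - v + current)
        if v >= v_thresh:
            # Threshold crossed: record a spike and reset the potential.
            spike_times.append(step * dt)
            v = v_reset
        potentials[step] = v
    return potentials, spike_times

if __name__ == "__main__":
    potentials, spike_times = simulate_lif(np.full(100, 1.5))
    print("spike times:", spike_times)
```

With these assumed parameters, a constant input above the threshold makes the neuron fire periodically, while a constant input below the threshold lets the leak hold the potential under the threshold so no spike occurs.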

Unsupervised Learning Mechanisms in SNNs

Let's explore the step-by-step learning mechanisms in SNNs using the STDP rule and LIF neurons when learning from encoded images:

1. Image Encoding: The input image is transformed into a sequence of spike trains, where each spike train corresponds to the activity of a specific neuron or a group of neurons. Encoding techniques may involve converting pixel intensities to spike rates or using more sophisticated encoding schemes.

2. STDP Weight Updates: As the encoded image is presented to the SNN, spike activity propagates through the network. Whenever a presynaptic spike arrives at a synapse before a postsynaptic spike, the STDP rule potentiates the synaptic weight. Conversely, if the postsynaptic spike comes before the presynaptic spike, the weight is depressed. This process allows the network to learn the temporal relationships between spikes and adjust the synaptic weights accordingly.

3. Neuron Firing and Spike Generation: LIF neurons receive weighted input spikes from presynaptic neurons. They integrate these spikes over time and update their membrane potential accordingly. If a neuron's membrane potential surpasses the threshold, it generates a spike and resets its potential. The precise timing of these spikes contributes to the learning process through STDP weight updates.

4. Iterative Learning: The steps of image encoding, STDP weight updates, and neuron firing are repeated with the same or different images. As the SNN processes multiple encoded images, it adapts its synaptic weights based on the temporal relationships between spikes. Gradually, the network learns to recognize the patterns encoded in the images, and those patterns become visible in the incoming weights of each neuron.
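The four steps above can be sketched end to end on toy data. The code below is a simplified illustration under several assumptions that do not come from the text: Poisson-style rate coding for the encoding step, a trace-based online form of STDP, weights clipped to the range [0, 1], and no competition between output neurons (practical systems often add some, for example lateral inhibition). All function names and parameter values are hypothetical.

```python
import numpy as np

def poisson_encode(image, n_steps, rng, max_rate=0.25):
    """Step 1: rate coding. A pixel in [0, 1] spikes at each time step
    with probability intensity * max_rate."""
    probs = np.clip(image.ravel(), 0.0, 1.0) * max_rate
    return rng.random((n_steps, probs.size)) < probs  # (time, inputs), bool

def train_on_image(weights, spikes_in, tau_m=20.0, v_thresh=1.0,
                   tau_trace=20.0, a_plus=0.01, a_minus=0.012):
    """Steps 2 and 3: present one spike train and update weights in place."""
    n_in, n_out = weights.shape
    v = np.zeros(n_out)            # membrane potentials of output neurons
    pre_trace = np.zeros(n_in)     # decaying memory of recent input spikes
    post_trace = np.zeros(n_out)   # decaying memory of recent output spikes
    out_counts = np.zeros(n_out, dtype=int)
    decay = np.exp(-1.0 / tau_trace)
    for pre in spikes_in:
        pre_trace *= decay
        post_trace *= decay
        # Depression: an input spike arriving after recent output spikes.
        weights -= a_minus * np.outer(pre, post_trace)
        pre_trace[pre] += 1.0
        # LIF update: leak, then add the weighted input spikes.
        v += -v / tau_m + pre.astype(float) @ weights
        post = v >= v_thresh
        v[post] = 0.0
        # Potentiation: an output spike following recent input spikes.
        weights += a_plus * np.outer(pre_trace, post)
        post_trace[post] += 1.0
        np.clip(weights, 0.0, 1.0, out=weights)
        out_counts += post
    return out_counts

def train(images, rng, n_out=2, n_steps=100, n_epochs=3):
    """Step 4: repeat encoding and learning over all images."""
    weights = rng.uniform(0.0, 0.3, size=(images[0].size, n_out))
    for _ in range(n_epochs):
        for image in images:
            train_on_image(weights, poisson_encode(image, n_steps, rng))
    return weights

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    horizontal = np.array([[0, 0, 0], [1, 1, 1], [0, 0, 0]], dtype=float)
    vertical = np.array([[0, 1, 0], [0, 1, 0], [0, 1, 0]], dtype=float)
    learned = train([horizontal, vertical], rng)
    # Each column reshaped to 3x3 is one output neuron's incoming weights.
    print(learned[:, 0].reshape(3, 3).round(2))
```

In this sketch, weights from pixels that never spike are left untouched, while weights from active pixels move according to the spike timing. Without a competition mechanism the output neurons may all drift toward similar weights, so the threshold, learning rates, and time constants would need tuning for real image data.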

Conclusion

Unsupervised learning in spiking neural networks combines the spike-timing-dependent plasticity rule with leaky integrate-and-fire neurons to learn from encoded images. Through the precise timing of spikes and the adaptation of synaptic weights, SNNs can capture temporal relationships and recognize patterns without direct supervision. This learning paradigm holds considerable potential for applications in machine learning and neuroscience, including pattern recognition, temporal processing, and unsupervised learning.
