DeepXplore: Unleashing the Power of Automated Whitebox Testing for Deep Learning Systems

https://arxiv.org/abs/1705.06640

Authors: Kexin Pei, Yinzhi Cao, Junfeng Yang, Suman Jana

Introduction

Deep learning (DL) systems often exhibit unexpected or incorrect behaviors due to biased training data or overfitting, which can lead to disastrous consequences, as demonstrated by incidents involving self-driving cars.

Traditional testing approaches are insufficient for systematically testing large-scale DL systems. Current practice relies on:

- Manually labeling as much test data as possible

- Generating synthetic training data

- Adversarial deep learning, which has shown that synthetic images can expose errors in DL models

Overview of DeepXplore

DeepXplore is an automated whitebox testing framework specifically designed for systematically testing deep neural networks (DNNs). Its objective is to generate new test inputs that achieve high neuron coverage and expose behavioral differences among multiple DNNs trained for the same task.

Key Objectives

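1. Maximize differential behaviors: exposes inputs on which the tested DNNs disagree, but on its own may only surface different manifestations of the same underlying root cause.

2. Maximize neuron coverage: exercises more of each DNN's internal logic, but high neuron coverage alone may not induce many erroneous behaviors.

DeepXplore therefore optimizes both objectives jointly.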
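As we read the paper, the two objectives are combined into a single function that is maximized by gradient ascent over the input x. In the sketch below the notation is ours: c is the class all DNNs assign to the seed input, F_k(x)[c] is the probability DNN k gives to class c, j is the DNN being pushed away from c, f_n(x) is the output of a currently inactive neuron n, and λ1, λ2 are weights balancing the terms.

```latex
\mathrm{obj}_{\text{joint}}(x) = \Big( \sum_{k \neq j} F_k(x)[c] \; - \; \lambda_1 \, F_j(x)[c] \Big) + \lambda_2 \, f_n(x)
```

Increasing this value keeps the other DNNs confident in c, lowers DNN j's confidence in c, and raises the chosen neuron's activation at the same time.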

Testing Methodology

DeepXplore's methodology addresses its two main objectives: maximizing differential behaviors and maximizing neuron coverage.

Maximizing Differential Behaviors:

Objective: Generate test inputs that induce different behaviors across the tested DNNs.

Goal: Given n DNNs that all classify a seed input x the same way, modify x into x' so that at least one of the n DNNs classifies x' differently from the others.
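The differential objective can be illustrated with a toy example. This sketch assumes two hand-written linear softmax classifiers over 2-D inputs stand in for real DNNs, so the gradient can be computed analytically instead of by backpropagation; all function names are hypothetical. Starting from a seed both models label as class c, it performs gradient ascent on the sum of F_k(x)[c] over k ≠ j minus λ1·F_j(x)[c] until the models disagree.

```python
import numpy as np


def softmax(z):
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def prob_and_grad(W, x, c):
    """Return F(x)[c] = softmax(W @ x)[c] and its gradient with respect to x."""
    p = softmax(W @ x)
    # d p_c / d x = p_c * (W[c] - sum_i p_i * W[i])
    return p[c], p[c] * (W[c] - p @ W)


def differential_objective(models, x, c, j, lam1=1.0):
    """sum_{k != j} F_k(x)[c] - lam1 * F_j(x)[c], together with its gradient."""
    value, grad = 0.0, np.zeros_like(x)
    for k, W in enumerate(models):
        pc, g = prob_and_grad(W, x, c)
        weight = -lam1 if k == j else 1.0
        value += weight * pc
        grad += weight * g
    return value, grad


def predictions(models, x):
    return [int(np.argmax(W @ x)) for W in models]


def find_difference(models, seed, j, lam1=1.0, step=0.5, max_iters=200):
    """Gradient ascent from a seed all models agree on, until they disagree."""
    x = seed.astype(float)
    preds = predictions(models, x)
    if len(set(preds)) != 1:
        raise ValueError("seed must be classified identically by all models")
    c = preds[0]
    for _ in range(max_iters):
        _, grad = differential_objective(models, x, c, j, lam1)
        x = x + step * grad
        preds = predictions(models, x)
        if len(set(preds)) > 1:
            return x, preds  # difference-inducing input found
    return None, preds


# Hypothetical models: A predicts class 0 iff x0 > x1; B predicts class 0 iff x0 > 2 * x1.
model_a = np.array([[1.0, 0.0], [0.0, 1.0]])
model_b = np.array([[1.0, 0.0], [0.0, 2.0]])
x_new, preds = find_difference([model_a, model_b], np.array([2.0, 0.5]), j=1)
print(x_new, preds)  # A still predicts 0, B now predicts 1
```

With real DNNs, the analytic gradient in `prob_and_grad` would be replaced by the gradient a deep learning framework computes through the whole network.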

Maximizing Neuron Coverage:

Objective: Generate inputs that maximize neuron coverage.

Goal: Pick a neuron n that is not yet activated (its output does not exceed the activation threshold t) and modify the input so that the neuron's output f_n(x) rises above t.
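Neuron coverage, and the choice of which inactive neuron to target next, can be sketched as follows. The sketch assumes per-layer neuron outputs have already been collected into NumPy arrays of shape (num_inputs, num_neurons) and that t is a user-chosen threshold; the function names are hypothetical. A neuron counts as covered if its output exceeds t for at least one input, and coverage is the covered fraction of all neurons.

```python
import numpy as np


def covered_mask(layer_outputs, t):
    """Per layer, a boolean vector marking neurons whose output exceeds t for some input."""
    return [(out > t).any(axis=0) for out in layer_outputs]


def neuron_coverage(layer_outputs, t=0.0):
    """Fraction of all neurons activated above t by at least one input."""
    masks = covered_mask(layer_outputs, t)
    covered = sum(int(m.sum()) for m in masks)
    total = sum(m.size for m in masks)
    return covered / total


def pick_uncovered_neuron(layer_outputs, t=0.0, rng=None):
    """Randomly choose an inactive (layer, neuron) pair to target next, or None."""
    if rng is None:
        rng = np.random.default_rng()
    candidates = [
        (layer, int(idx))
        for layer, mask in enumerate(covered_mask(layer_outputs, t))
        for idx in np.flatnonzero(~mask)
    ]
    if not candidates:
        return None  # every neuron is already covered
    return candidates[rng.integers(len(candidates))]


# Two inputs; layer 0 has 2 neurons, layer 1 has 3 neurons.
layers = [
    np.array([[0.1, 0.9], [0.0, 0.2]]),
    np.array([[0.6, 0.0, 0.0], [0.0, 0.7, 0.0]]),
]
print(neuron_coverage(layers, t=0.5))        # 3 of 5 neurons covered -> 0.6
print(pick_uncovered_neuron(layers, t=0.5))  # (0, 0) or (1, 2)
```

The selected neuron's output then plays the role of f_n(x) in the objective being maximized.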

Domain-Specific Constraints

- Test inputs must satisfy domain-specific constraints to remain physically realistic (e.g., the pixel values of a generated test image x must stay within 0-255).

- Handling constraints: use a rule-based method to ensure generated tests satisfy custom constraints.

- Modification process: at each iteration, modify the gradient (grad) so that the next input still satisfies the constraints.
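The gradient-modification step can be sketched as below. The rule and its parameters are illustrative assumptions: `lighting_rule` (a hypothetical name, modeled on the uniform lighting change the paper applies to image inputs) replaces every gradient entry with the gradient's mean so the whole image is brightened or darkened by the same amount, and the updated image is then clipped to the valid pixel range 0-255.

```python
import numpy as np


def lighting_rule(grad):
    """Hypothetical lighting constraint: every pixel moves by the same amount."""
    return np.full_like(grad, grad.mean())


def constrained_step(x, grad, step=10.0, rule=lighting_rule, lo=0.0, hi=255.0):
    """Apply a domain rule to the gradient, take one ascent step, and clip to [lo, hi]."""
    x_next = x + step * rule(grad)
    return np.clip(x_next, lo, hi)


image = np.array([[10.0, 250.0], [100.0, 0.0]])
grad = np.array([[1.0, 3.0], [2.0, 2.0]])  # mean gradient is 2.0
print(constrained_step(image, grad))        # values: [[30, 255], [120, 20]]
```

Other constraints can be plugged in as different `rule` functions, while the final clip keeps every pixel inside the valid range.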

Experimental Evaluation

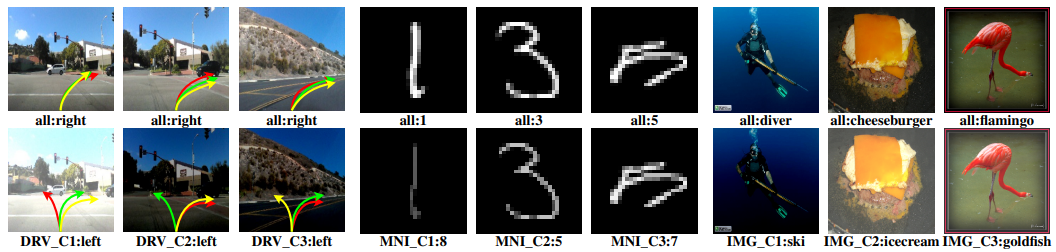

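The authors adopt five popular public datasets covering different types of data and evaluate DeepXplore on three DNNs per dataset:

- MNIST (handwritten digits)

- ImageNet (large-scale image classification)

- Driving (Udacity self-driving car challenge images)

- Contagio/VirusTotal (PDF malware)

- Drebin (Android malware)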

All evaluated DNNs are either pretrained (i.e., they use public weights released by previous researchers) or trained using public real-world architectures to reach performance comparable to state-of-the-art models for the corresponding dataset. The three DNNs tested on each dataset have different architectures.

Conclusion

DeepXplore has several advantages:

* First whitebox system for systematically testing DL systems.

* New metric: neuron coverage, the fraction of neurons activated above a threshold by at least one input, which measures how much of a DNN's logic a set of inputs exercises.

* Found thousands of erroneous behaviors in 15 state-of-the-art DNNs trained on five real-world datasets.

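It also has several limitations:

* Only a limited set of domain-specific transformations (those encoded as constraints) is explored

* No guarantee that a DNN is free of errors: like any testing approach, it can reveal erroneous behaviors but cannot prove their absence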