Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation

https://arxiv.org/abs/2208.12242

Authors: Nataniel Ruiz, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, Kfir Aberman

The paper was presented at the Computer Vision and Pattern Recognition Conference (CVPR) 2023 and received the Honorable Mention (Student) award.

Introduction

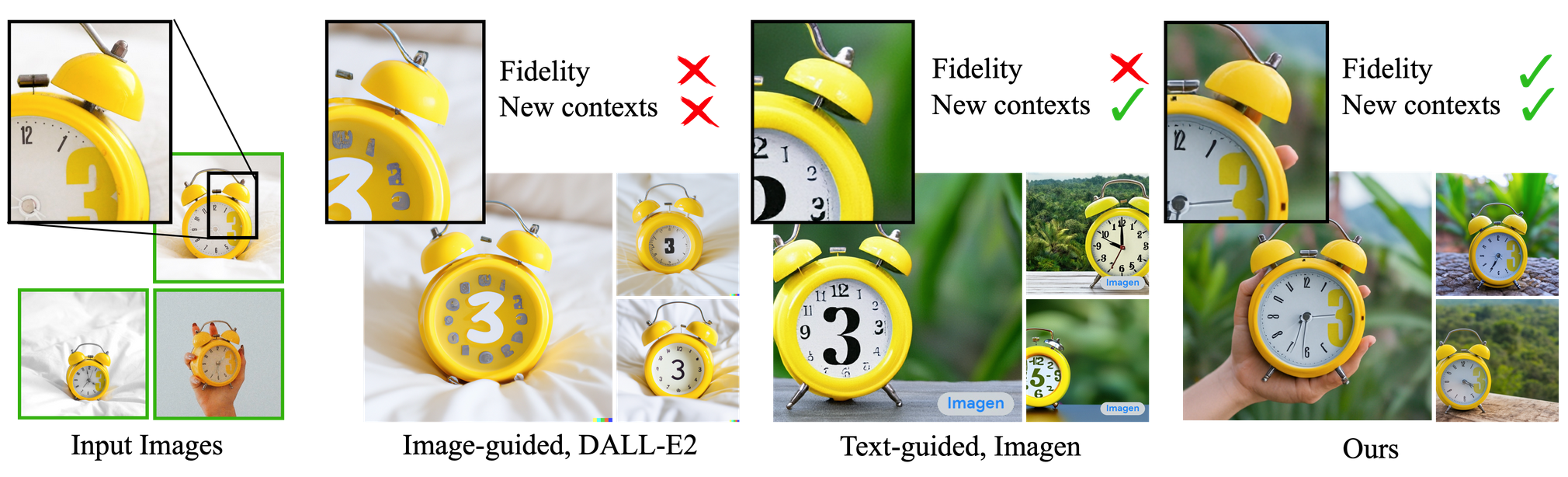

Large language models have achieved good performance on different tasks and different data types. However, they often lack in content fidelity and new context creation. These features could help to generate images in a more personalized way.

DreamBooth is a fine tuning method proposed in the article. It allows you to obtain high results while requiring a few images (typically 3-5 images) for training. As a result, you get a final image that has:

- the subject in a completely different environment - new context;

- while the subject's key features are preserved - fidelity.

Method

The main goal of the method is to increase the model’s vocabulary so that it associates new words with the subject that the user wants to see as a result. In the paper, the authors present a new approach for "personalization" of text-to-image diffusion models, such as DALL-E2 and Imagen.

Text-to-image diffusion models. It starts with the idea of diffusion and that's when you iteratively and gradually make an image noisier and noisier. And then you train a model to learn how to denoise - to get back to the original image.

What if you go back to the step of creating the noisy image and add text to this through a text encoder? Then the noisy image has a text label attached to it. Now, the denoising model can be trained to denoise the noisy image into its original representation guided by the text and then it learns to generalize text into images.

So, we can start with random noise and a different, previously unseen, piece of text and decode that. The decoding model is given a bunch of noise with the encoded text and it will attempt to decode that into an original representation. In this way, we will go from random noise plus a text prompt to create a new image.

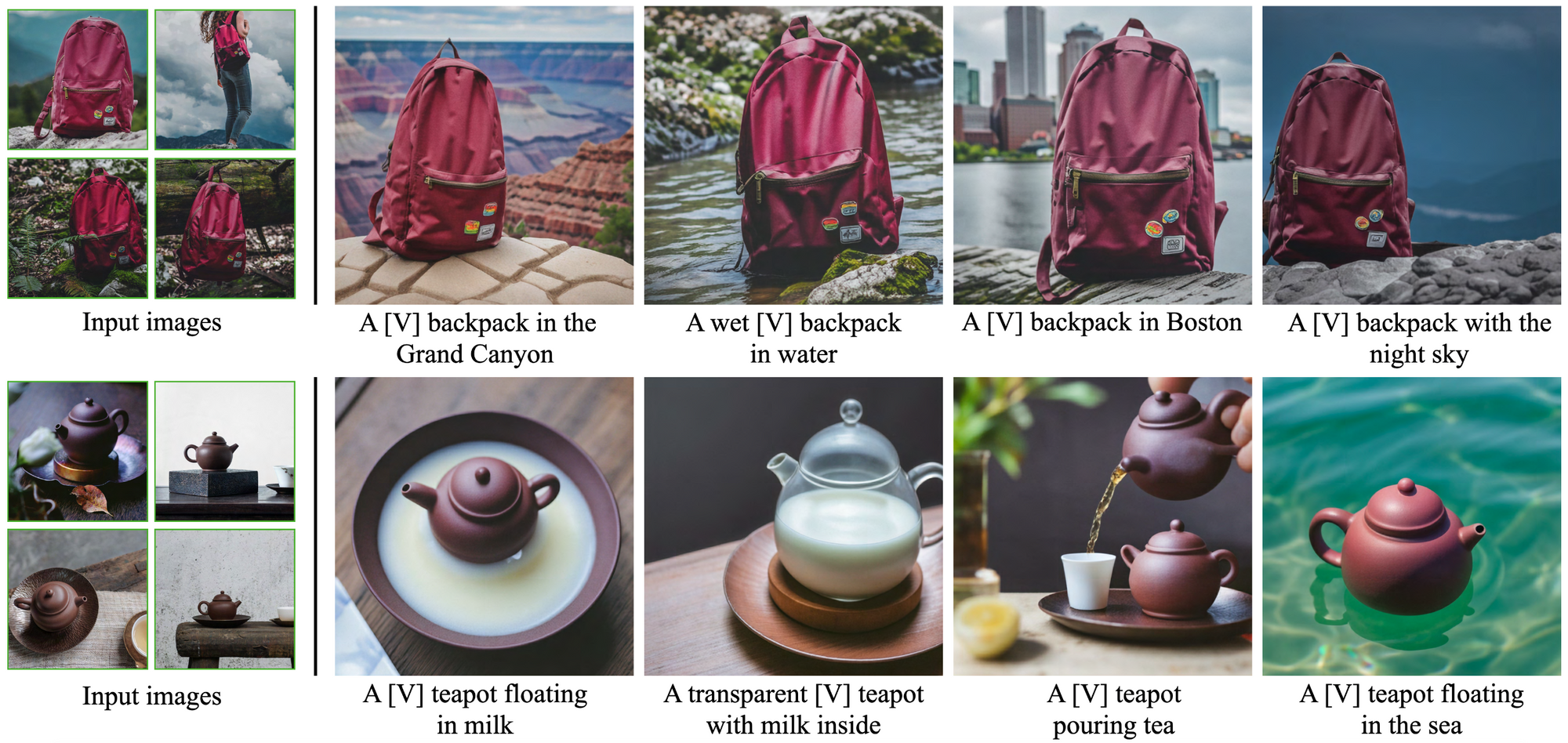

Fine tuning. Given 3-5 images of a subject, a text-to-image diffusion model is finetuned with the input images paired with a text prompt that contains a unique identifier and the class name. For example, "A [V] dog", where [V] is the unique identifier of the dog.

The idea is to add a new identifier-subject pair into the diffusion model's dictionary. In order to avoid the expense of writing detailed image descriptions for a given set of images, the authors propose a simpler approach and label all input images of the subject "a [identifier] [class noun]". The classes can be provided by the user or obtained using a classifier.

Dataset. The dataset consists of 30 subjects. It was collected by the authors. It includes unique objects and pets. 21 of the 30 subjects are objects, and 9 are live subjects/pets. They also collected 25 text prompts. For the evaluation, the authors generated four images per subject and per prompt, totalling 3000 images. The dataset is publicly available: https://github.com/google/dreambooth.

Results

The DreamBooth generation results show that it better preserves subject identity and is more faithful to prompts than stable diffusion models not fine tuned. As you can see on the picture below, it generates the subjects in different environments with high preservation of its key features.

It is important to notice that DreamBooth allows to generate the subject in new poses and from different view points with previously unseen scene composition and to have a realistic integration of the subject into the scene. Besides, given a text prompt, it can generate novel variations depending on the artistic style, while preserving subject identity.

One interesting feature of DreamBooth is the modification of the subject properties. For example, the authors show crosses between a specific chow chow dog and different animal species. In particular, we can see that the identity of the dog is well preserved even when the species changes.

Conclusion

In this blog post, we discussed a new interesting fine tuning method that takes as input a few images of a subject and the corresponding class name and returns a "personalized" text-to-image model that encodes a unique identifier that refers to the subject. Remarkably, this approach can work given only 3-5 subject images, making this technique very accessible. Various applications with animals and objects in the generated photorealistic scenes were demonstrated. In most cases, the generated images are indistinguishable from real images.

References

- Ruiz, N., Li, Y., Jampani, V., Pritch, Y., Rubinstein, M., & Aberman, K. (2023). Dreambooth: Fine tuning text-to-image diffusion models for subject-driven generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 22500-22510).

- https://dreambooth.github.io

- Vahdat, A. & Kreis, K. (2022). Improving Diffusion Models as an Alternative To GANs, Part 1. https://developer.nvidia.com/blog/improving-diffusion-models-as-an-alternative-to-gans-part-1/