SAM: Segment Anything Model by Meta AI

The Segment Anything (SA) project addresses three main questions about the arduous job of image segmentation.

Project page: https://segment-anything.com
Paper: https://arxiv.org/pdf/2304.02643.pdf

- What task will enable zero-shot generalization?

- What is the corresponding model architecture?

- What data can power this task and model?

- For the task, the goal is to return a valid segmentation mask given any segmentation prompt.

  - A “prompt” can be a set of foreground/background points, a rough box or mask, free-form text, or, in general, any information indicating what to segment in an image.

  - A “valid” mask means that even when a prompt is ambiguous and could refer to multiple objects, the output should be a reasonable mask for at least one of those objects.
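The ambiguity rule can be made concrete with a small sketch. It assumes the model returns several candidate masks for a single prompt, each with a predicted quality score (the released `segment_anything` predictor behaves this way when called with `multimask_output=True`). The helper name `pick_best_mask` and the toy masks and scores are hypothetical, chosen only for illustration.

```python
from typing import List, Sequence, Tuple

Mask = List[List[int]]  # toy binary mask: rows of 0/1 values


def pick_best_mask(masks: Sequence[Mask], scores: Sequence[float]) -> Tuple[Mask, float]:
    """Return the candidate mask with the highest predicted quality score."""
    if not masks or len(masks) != len(scores):
        raise ValueError("need one score per candidate mask")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return masks[best], scores[best]


# One click can be ambiguous: it may mean the whole object, a part, or a subpart.
whole = [[1, 1], [1, 1]]
part = [[1, 1], [0, 0]]
subpart = [[1, 0], [0, 0]]

# Made-up scores; a real model would predict them.
mask, score = pick_best_mask([whole, part, subpart], [0.71, 0.93, 0.64])
```

Any of the three candidates would count as a "valid" answer to the ambiguous click; ranking by score is just one simple way to commit to a single mask.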

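- For the model, they propose a powerful image encoder (a Vision Transformer pre-trained with MAE) that computes an image embedding, a prompt encoder that embeds prompts, and a lightweight mask decoder that combines the two information sources to predict segmentation masks. Points and boxes are embedded with positional encodings, masks with convolutions, and free-form text with the text encoder from CLIP.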

- By separating SAM into an image encoder and a fast prompt encoder / mask decoder, the same image embedding can be reused with different prompts.
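The "encode once, prompt many times" pattern can be sketched as follows. The function expects an object with `set_image` and `predict` methods, which is the interface exposed by `SamPredictor` in the released `segment_anything` package. The function name `segment_with_prompts` and the `CountingPredictor` stand-in are hypothetical and exist only so the sketch runs without downloading model weights.

```python
from typing import Any, Dict, Iterable, List, Tuple


def segment_with_prompts(
    predictor: Any, image: Any, prompts: Iterable[Dict[str, Any]]
) -> List[Tuple[Any, Any]]:
    """Embed the image once, then decode one result per prompt."""
    predictor.set_image(image)  # expensive step: runs the image encoder
    results = []
    for kwargs in prompts:
        # cheap step: prompt encoder + mask decoder on the cached embedding
        masks, scores, _ = predictor.predict(**kwargs)
        results.append((masks, scores))
    return results


class CountingPredictor:
    """Stand-in with the same two methods; it only counts how often each runs."""

    def __init__(self) -> None:
        self.encoder_calls = 0
        self.decoder_calls = 0

    def set_image(self, image: Any) -> None:
        self.encoder_calls += 1

    def predict(self, **kwargs: Any):
        self.decoder_calls += 1
        return ["mask"], [1.0], None


stub = CountingPredictor()
prompts = [
    {"point_coords": [[10, 20]], "point_labels": [1]},  # one foreground click
    {"point_coords": [[40, 5]], "point_labels": [0]},   # one background click
    {"box": [0, 0, 50, 50]},                            # a rough box (x0, y0, x1, y1)
]
out = segment_with_prompts(stub, image="placeholder image", prompts=prompts)
print(stub.encoder_calls, stub.decoder_calls)
```

With the real library, the predictor would be built from a downloaded checkpoint (for example via `sam_model_registry` and `SamPredictor`), and the prompt values would be NumPy arrays rather than plain lists.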

- Finally, for the data, they build a data engine with three stages:

  - assisted-manual (4.3M masks from 120k images)

  - semi-automatic (an additional 5.9M masks in 180k images)

  - fully automatic (producing the 1.1B masks of the final dataset, SA-1B, over 11M images)
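As a rough sanity check on the scale of each stage, the mask and image counts can be turned into masks per image. The figures below are the ones reported in the paper (4.3M masks on 120k images, an additional 5.9M masks on 180k images, and 1.1B masks on 11M images). The ratios are back-of-the-envelope values, not statistics quoted from the paper, and the helper name is hypothetical.

```python
# (masks collected in the stage, images used in the stage), as reported in the paper
stages = {
    "assisted-manual": (4_300_000, 120_000),
    "semi-automatic": (5_900_000, 180_000),  # additional masks only
    "fully automatic": (1_100_000_000, 11_000_000),
}


def masks_per_image(masks: int, images: int) -> float:
    """Average number of masks collected per image in one stage."""
    return masks / images


density = {name: round(masks_per_image(m, i)) for name, (m, i) in stages.items()}
for name, value in density.items():
    print(f"{name}: about {value} masks per image")
```

The fully automatic stage is where the density and the volume both jump, which is why it accounts for essentially all of the final dataset.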

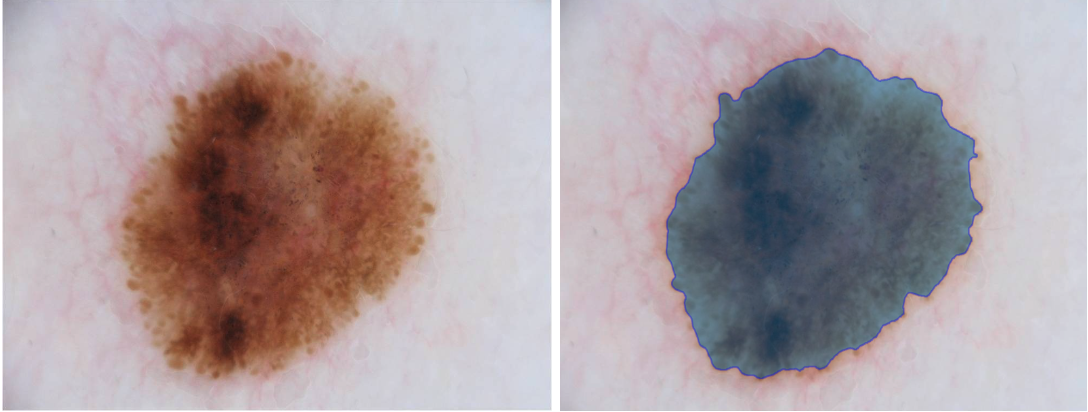

- Examples of applying SAM to skin cancer lesion detection in “Segment Everything” mode

- Examples of applying SAM to psoriasis lesion segmentation with a box prompt
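The two usage modes above can be connected with a little post-processing. This is a minimal sketch, assuming the automatic ("segment everything") output is a list of dictionaries with `area`, `bbox` (in x, y, width, height order) and `predicted_iou` keys, as returned by `SamAutomaticMaskGenerator.generate` in the released `segment_anything` package, and that a box prompt is given as (x0, y0, x1, y1), as `SamPredictor.predict` expects. The helper names, thresholds, and toy records are hypothetical.

```python
from typing import Any, Dict, List, Sequence


def xywh_to_xyxy(box: Sequence[float]) -> List[float]:
    """Convert an (x, y, width, height) box into (x0, y0, x1, y1) form."""
    x, y, w, h = box
    return [x, y, x + w, y + h]


def keep_confident_masks(
    records: Sequence[Dict[str, Any]], min_area: int = 100, min_iou: float = 0.9
) -> List[Dict[str, Any]]:
    """Drop tiny or low-confidence masks and sort the rest, largest first."""
    kept = [
        r for r in records
        if r["area"] >= min_area and r["predicted_iou"] >= min_iou
    ]
    return sorted(kept, key=lambda r: r["area"], reverse=True)


# Toy records standing in for the automatic output on one image.
records = [
    {"area": 1500, "bbox": [10, 10, 50, 40], "predicted_iou": 0.92},
    {"area": 30, "bbox": [5, 5, 6, 5], "predicted_iou": 0.95},      # too small
    {"area": 900, "bbox": [70, 20, 30, 30], "predicted_iou": 0.70},  # low confidence
    {"area": 5200, "bbox": [40, 60, 80, 65], "predicted_iou": 0.97},
]

kept = keep_confident_masks(records)
# Reuse the largest region as a box prompt for a second, more focused pass.
lesion_box = xywh_to_xyxy(kept[0]["bbox"])
```

In a lesion workflow the box would more likely come from a clinician or an upstream detector; deriving it from the automatic masks is only one possible choice.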