Gradient Strikes Back: How Filtering Out High Frequencies Improves Explanations

Sabine MUZELLEC

PhD Student, CerCo – CNRS, Toulouse

September 14th, 2023, 4 PM

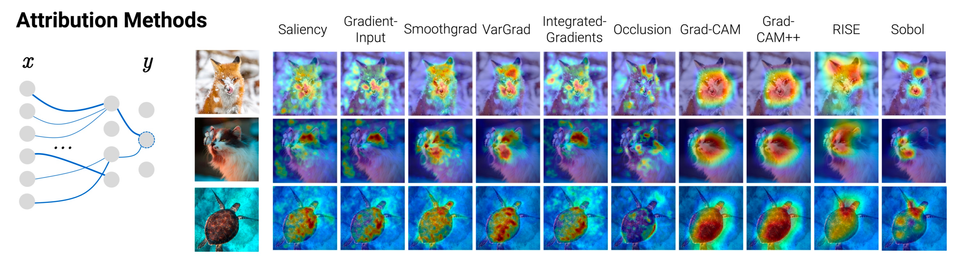

Recent years have witnessed an explosion in the development of novel prediction-based attribution methods, which have slowly been supplanting older gradient-based methods to explain the decisions of deep neural networks. However, it is still not clear why prediction-based methods outperform gradient-based ones. Here, we start with an empirical observation: these two approaches yield attribution maps with very different power spectra, with gradient-based methods revealing more high-frequency content than prediction-based methods. This observation raises multiple questions: what is the source of this high-frequency information, and does it truly reflect decisions made by the system? Lastly, why would the absence of high-frequency information in prediction-based methods yield better explainability scores along multiple metrics? We analyze the gradient of three representative visual classification models and observe that it contains noisy information emanating from high frequencies. Furthermore, our analysis reveals that the operations used in Convolutional Neural Networks (CNNs) for downsampling appear to be a significant source of this high-frequency content, suggesting aliasing as a possible underlying basis. We then apply an optimal low-pass filter for attribution maps and demonstrate that it improves gradient-based attribution methods. We show that (i) removing high-frequency noise yields significant improvements in the explainability scores obtained with gradient-based methods across multiple models, leading to (ii) a novel ranking of state-of-the-art methods with gradient-based methods at the top. We believe that our results will spur renewed interest in simpler and computationally more efficient gradient-based methods for explainability.
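To make the filtering idea concrete, here is a minimal NumPy sketch of low-pass filtering a 2D attribution map in the Fourier domain. It is an illustration only, not the authors' implementation: the function name `low_pass_filter`, the hard circular mask, and the `cutoff` parameter are assumptions, and the sketch does not attempt to find the optimal cutoff mentioned in the abstract.

```python
import numpy as np


def low_pass_filter(attribution, cutoff):
    """Remove spatial frequencies above `cutoff` from a 2D attribution map.

    `cutoff` is a hypothetical parameter, expressed in cycles per image.
    """
    h, w = attribution.shape
    # Frequency coordinates along each axis, in cycles per image.
    fy = np.fft.fftfreq(h) * h
    fx = np.fft.fftfreq(w) * w
    # Radial distance of every frequency bin from the zero frequency.
    radius = np.sqrt(fy[:, None] ** 2 + fx[None, :] ** 2)
    # Hard circular mask: keep only the low-frequency bins.
    mask = radius <= cutoff
    spectrum = np.fft.fft2(attribution)
    # The mask is symmetric, so the inverse transform is real up to rounding.
    return np.real(np.fft.ifft2(spectrum * mask))


# Toy "gradient map": a smooth pattern plus a high-frequency component.
x = np.arange(32)
smooth = np.tile(np.cos(2 * np.pi * 2 * x / 32), (32, 1))
noisy = smooth + np.tile(np.cos(2 * np.pi * 12 * x / 32), (32, 1))
filtered = low_pass_filter(noisy, cutoff=5)
```

In this toy case the filtered map recovers the smooth pattern, because the added component lies above the chosen cutoff. For real attribution maps the cutoff would have to be selected per model and method, which is the part this sketch leaves out.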

Supplementary Materials

Paper: https://arxiv.org/abs/2307.09591

GitHub: https://github.com/deel-ai/xplique/blob/a7d5aa6675a0bf299ce7a28bc48aaaa1db780390/docs/api/attributions/forgrad.md#L4